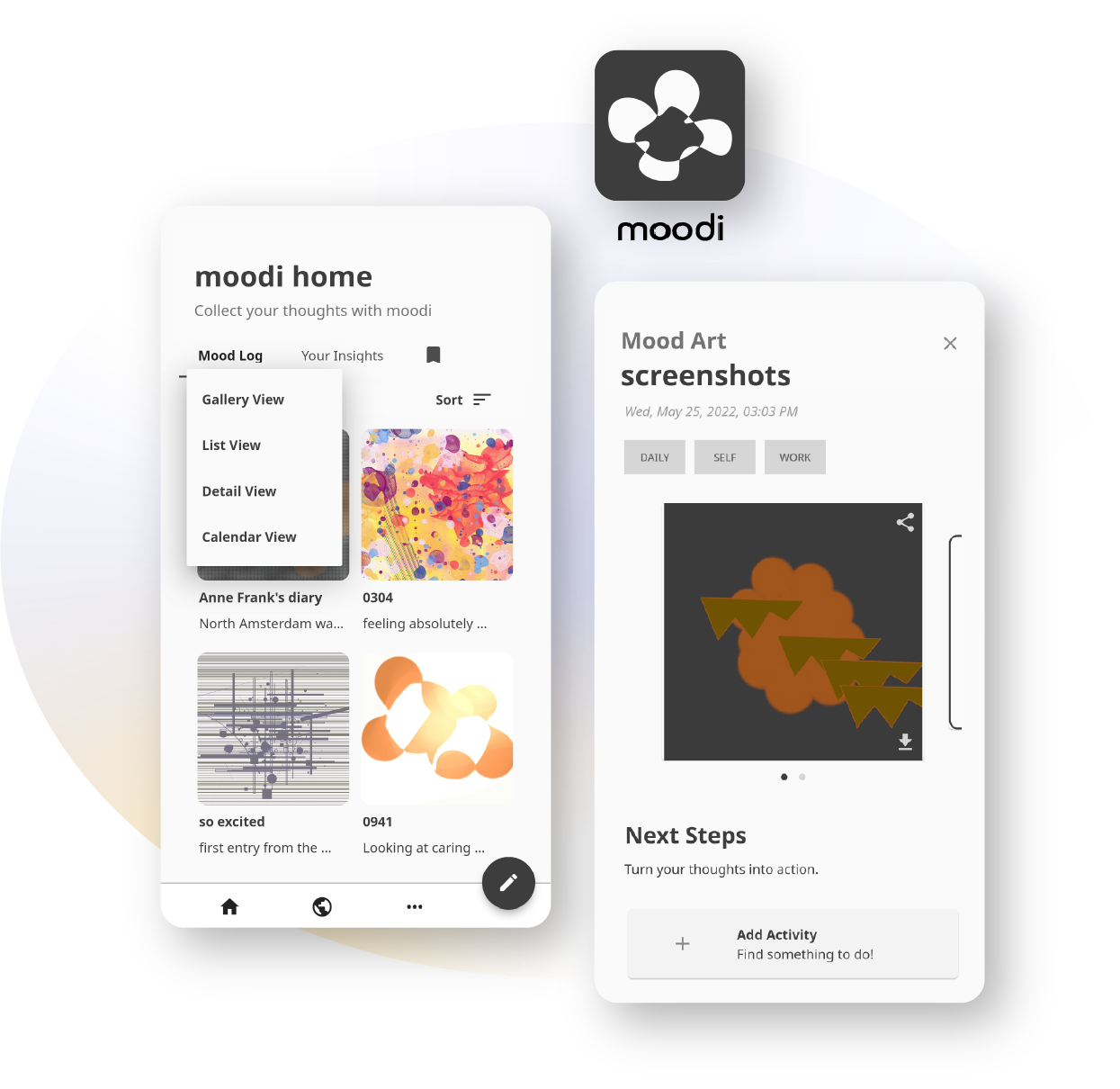

Moodi is a diary app that records your text entries as artworks.

Through natural language processing, moodi analyzes your text entry and “tokenizes” it into digital art that you can openly share with the world.

The generated artworks are abstract representations of your input. They are less explicit and revealing, until you are in the mood to dig deep and reread.

Entries accumulated over time offer a unique view into your thought journey.

RISD Spring 2022 Senior Studio

Duration: Three months

Self-directed project

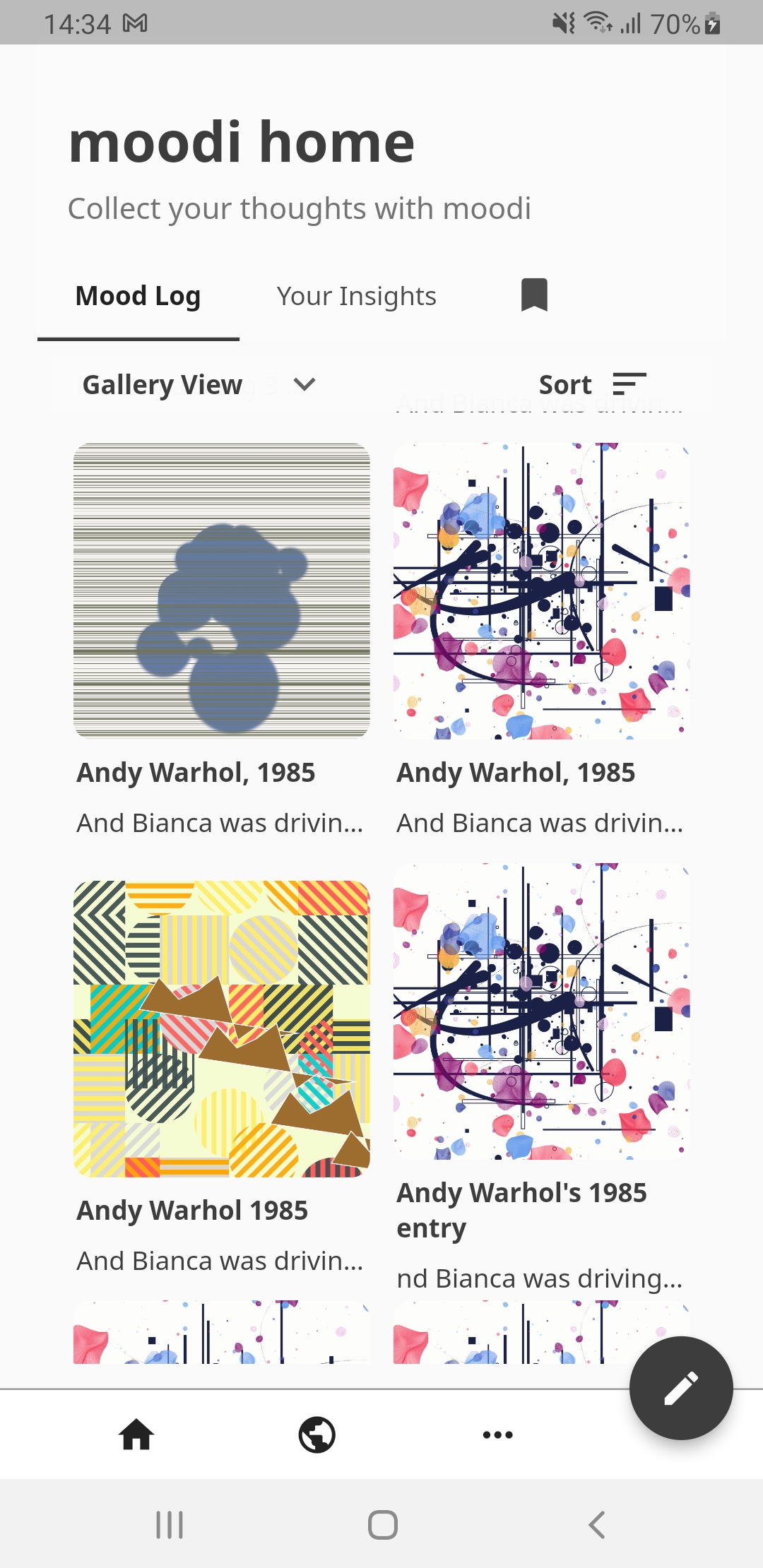

This walkthrough is made up of screen-recorded interactions with the Android application. The text input was a snippet from *The Diary of a Young Girl* by Anne Frank:

“North Amsterdam was very heavily bombed on Sunday. There was apparently a great deal of destruction. Entire streets are in ruins, and it will take a while for them to dig out all the bodies. [...] It still makes me shiver to think of the dull, distant drone that signified the approaching destruction.”

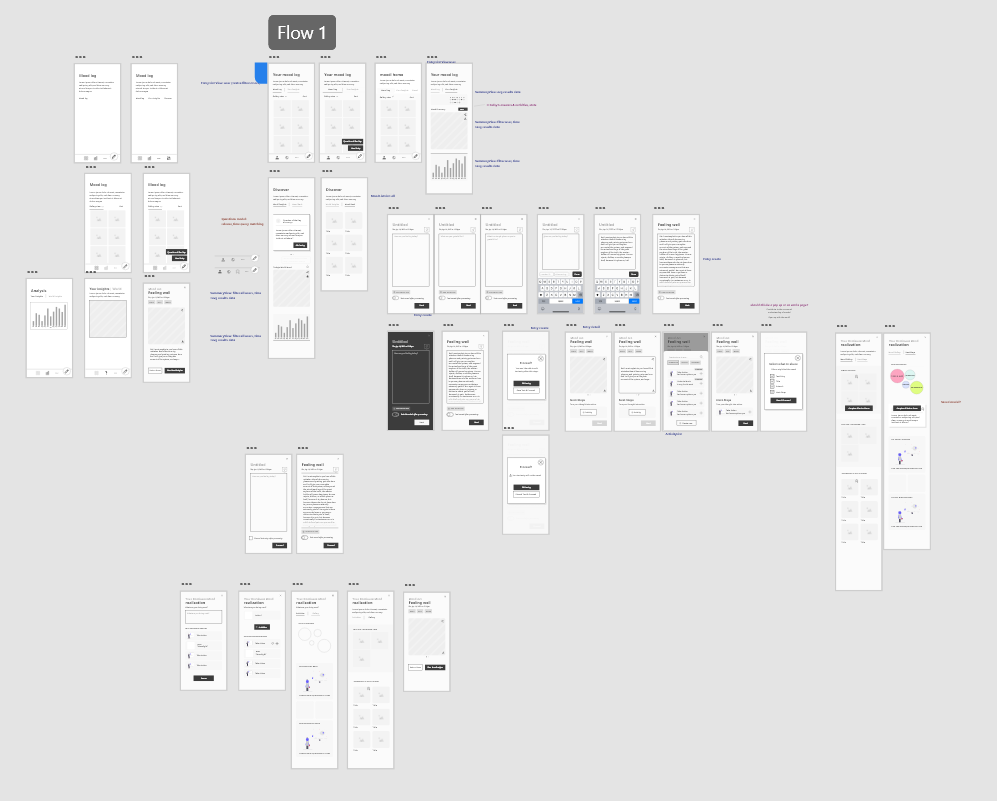

Primary flow

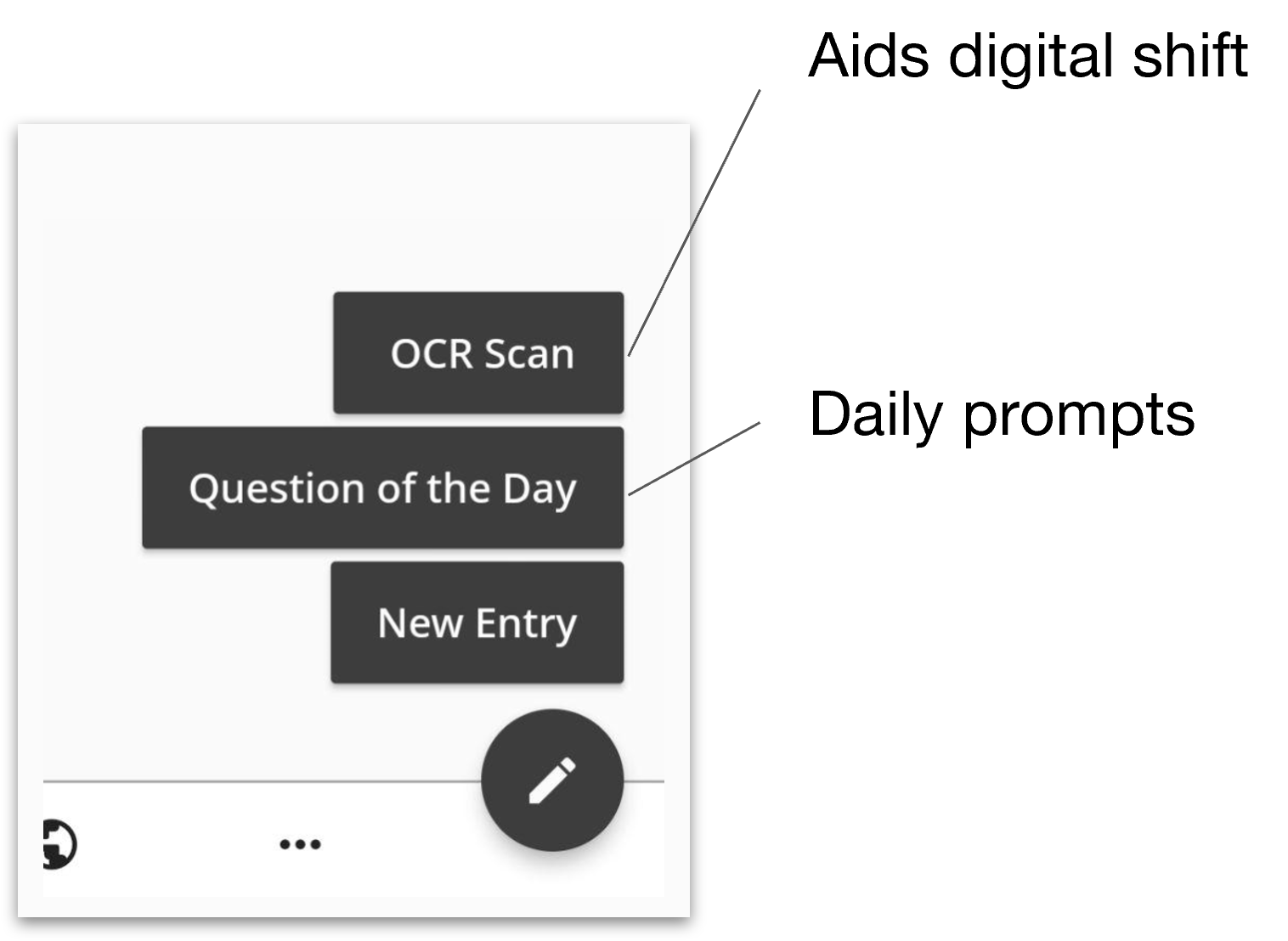

From Home, there are three speculative ways to add an entry.

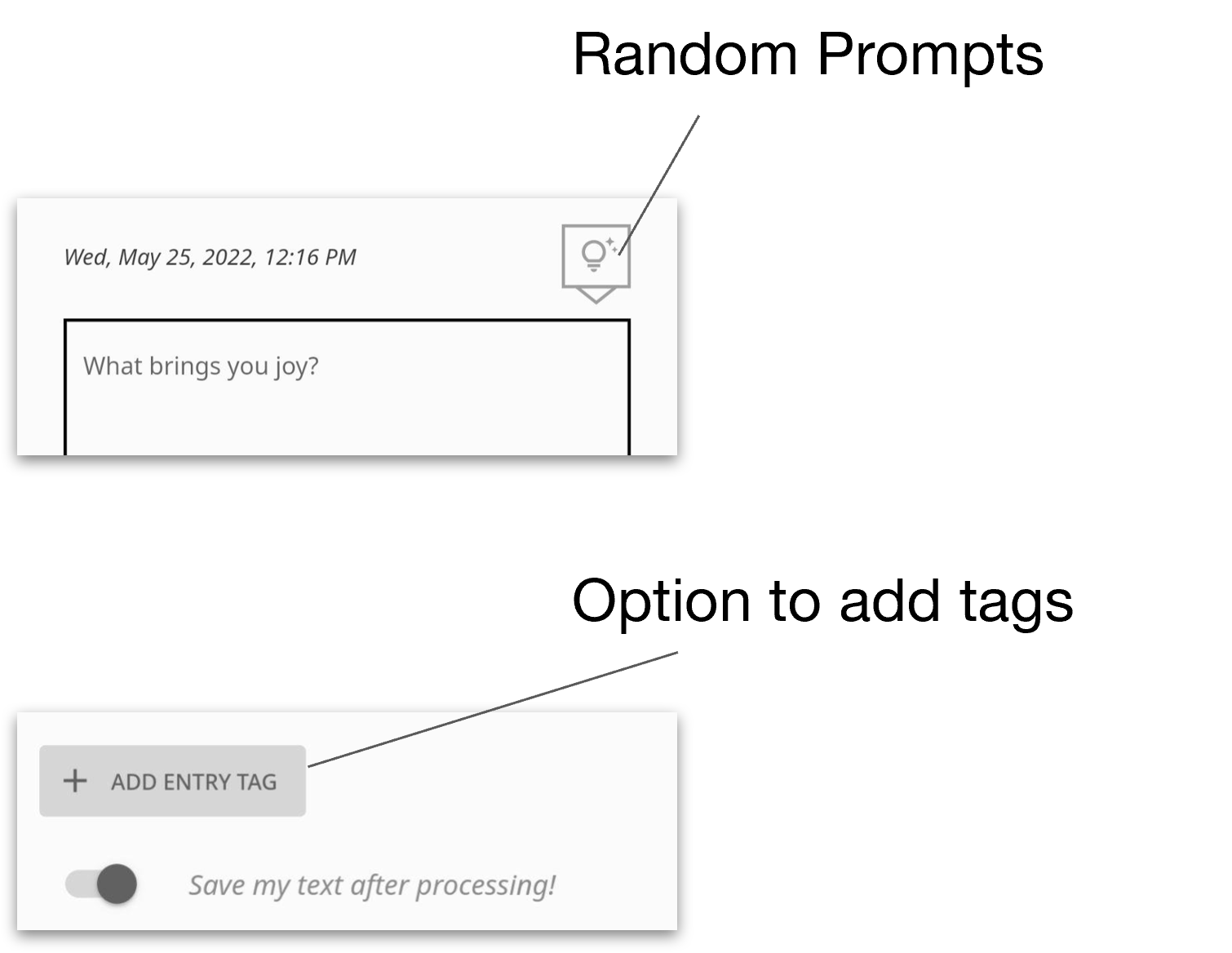

Random Prompts and tagging are included as extra features.

Art is generated from the text entry.

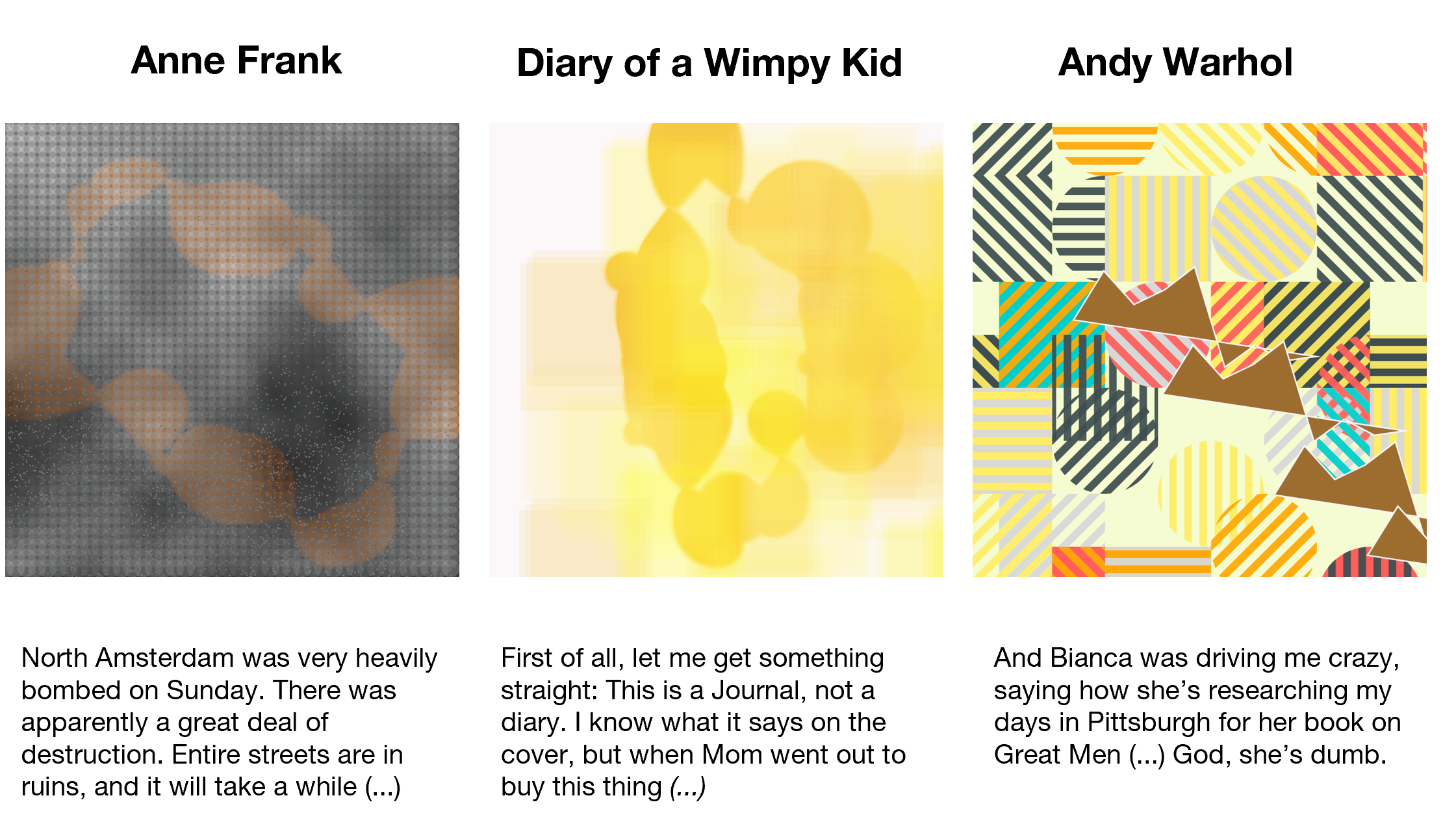

I was pleasantly surprised by the results, which matched my personal impression of the authors’ characters and the attitude of each entry.

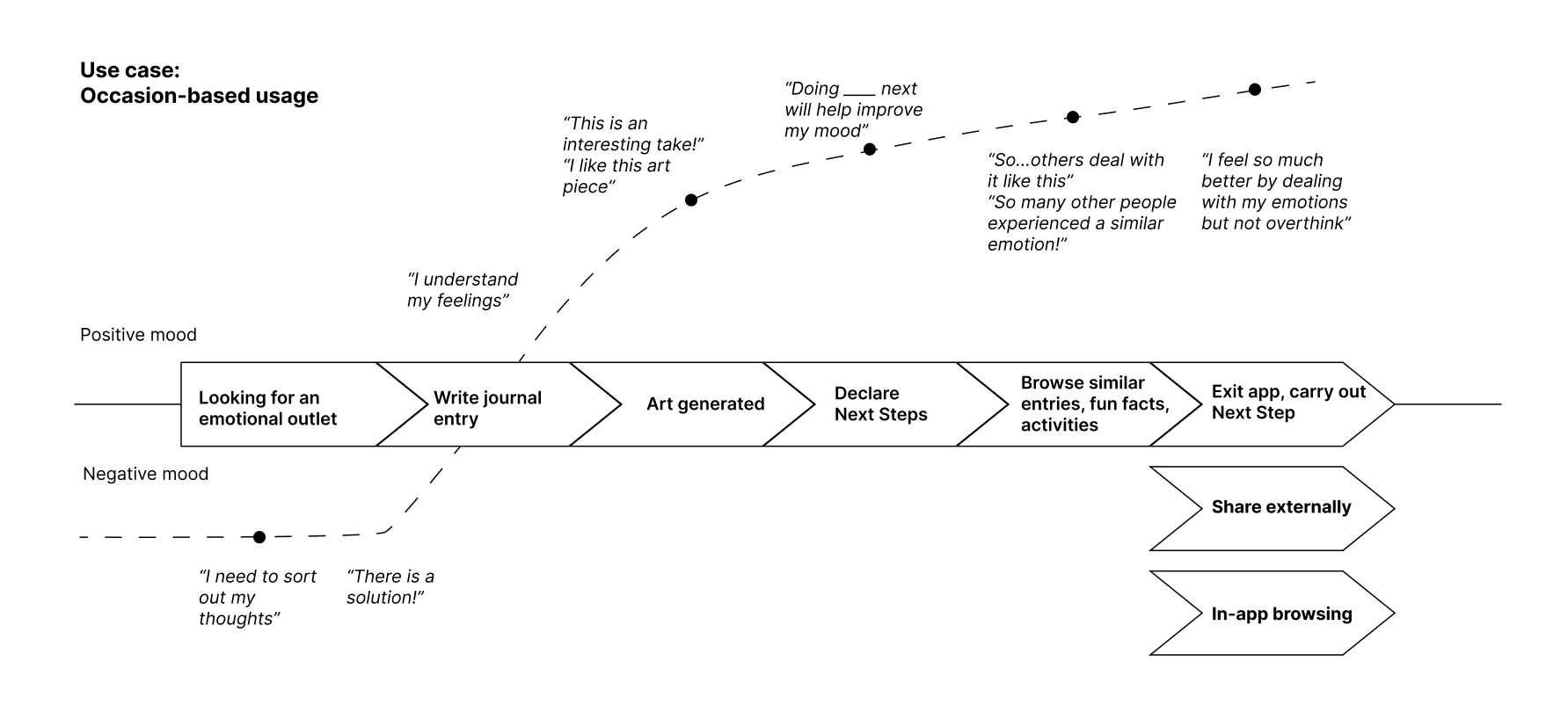

After the artwork is generated, users are prompted to add or select “Next Step(s)”.

This step is meant to shift gears from emotional to critical thinking, preventing excessive rumination.

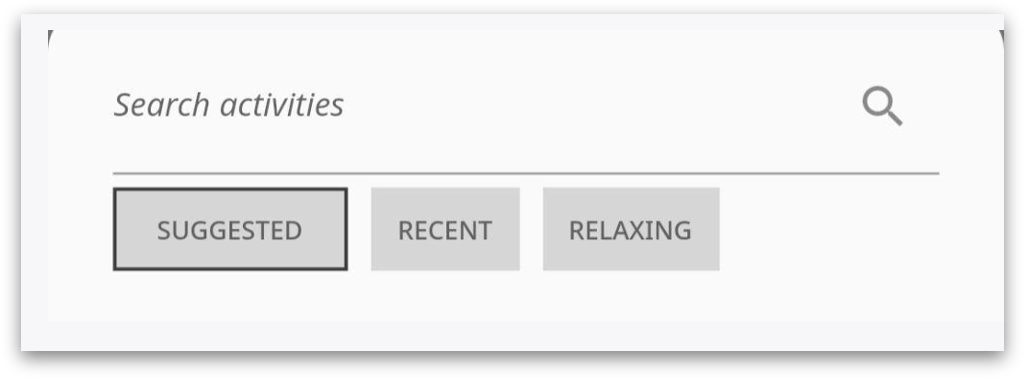

“Next Steps” activities are suggested based on scientific recommendations for the identified mood, popular activities chosen by other users for that mood, previously selected moods, and so on.
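As a rough illustration of how these sources could be merged into one suggestion list, here is a minimal Python sketch (Python because moodi's backend is Django). The function name `suggest_next_steps`, the priority order (curated, then popular, then the user's past choices), and the placeholder activities are all hypothetical, not moodi's actual logic.

```python
from typing import Dict, List

# Placeholder data: hypothetical curated activities per mood.
CURATED: Dict[str, List[str]] = {
    "sadness": ["Take a short walk", "Call a friend"],
    "anger": ["Try box breathing", "Write down what you can control"],
}


def suggest_next_steps(
    mood: str,
    curated: Dict[str, List[str]],
    popular: Dict[str, List[str]],
    past_choices: List[str],
    limit: int = 5,
) -> List[str]:
    """Merge curated, community-popular, and previously chosen activities.

    List order encodes priority (curated first, then popular, then the
    user's past choices); a duplicate keeps its first, highest-priority slot.
    """
    candidates = curated.get(mood, []) + popular.get(mood, []) + past_choices
    seen = set()
    merged: List[str] = []
    for activity in candidates:
        if activity not in seen:
            seen.add(activity)
            merged.append(activity)
    return merged[:limit]


if __name__ == "__main__":
    popular = {"sadness": ["Listen to music", "Call a friend"]}
    print(suggest_next_steps("sadness", CURATED, popular, ["Journal for five more minutes"]))
```

Ordering by source keeps expert recommendations on top while still surfacing what the community and the user have found helpful.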

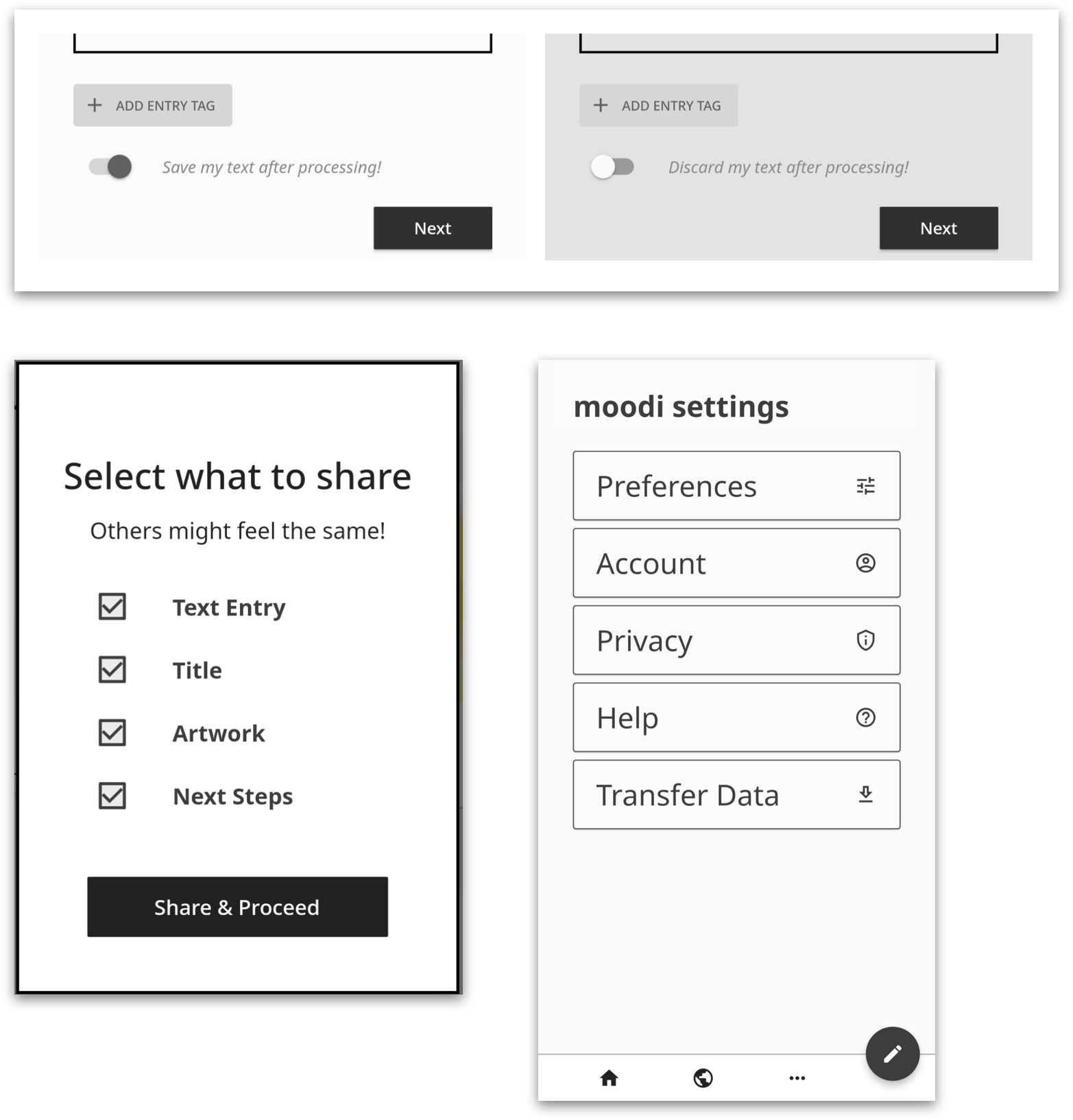

Moodi functions perfectly without storing users’ text entries. Users have total control over what to save and publicize in the app.

The moodi UI highlights the fact that users are in control (e.g., the background shifts color when users choose to discard their text after processing).
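One way to express "process, then discard" in the backend is to persist only the derived scores that drive the artwork and to keep the raw text only on opt-in. This is a minimal sketch under that assumption; `EntryRecord`, `process_entry`, and the `keep_text` flag are hypothetical names, and the analyzer is passed in as a plain function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class EntryRecord:
    """What gets saved for one diary entry."""
    scores: Dict[str, float]      # derived emotion scores that drive the artwork
    text: Optional[str] = None    # raw text, kept only when the user opts in


def process_entry(
    text: str,
    analyze: Callable[[str], Dict[str, float]],
    keep_text: bool = False,
) -> EntryRecord:
    """Analyze an entry, then keep the raw text only if the user chose to save it.

    The artwork is drawn from `scores` alone, so discarding the text
    does not change what the user sees.
    """
    scores = analyze(text)
    return EntryRecord(scores=scores, text=text if keep_text else None)
```

This only controls what gets saved; it is not a secure-deletion mechanism for text already held in memory.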

Dominant Mood: Mood Gallery

See and find an anonymous community through similar mood results.

Dominant Mood: Next Steps

Both moodi-curated and user-generated activities for the mood.

Finishing up: showing the user their identified dominant mood.

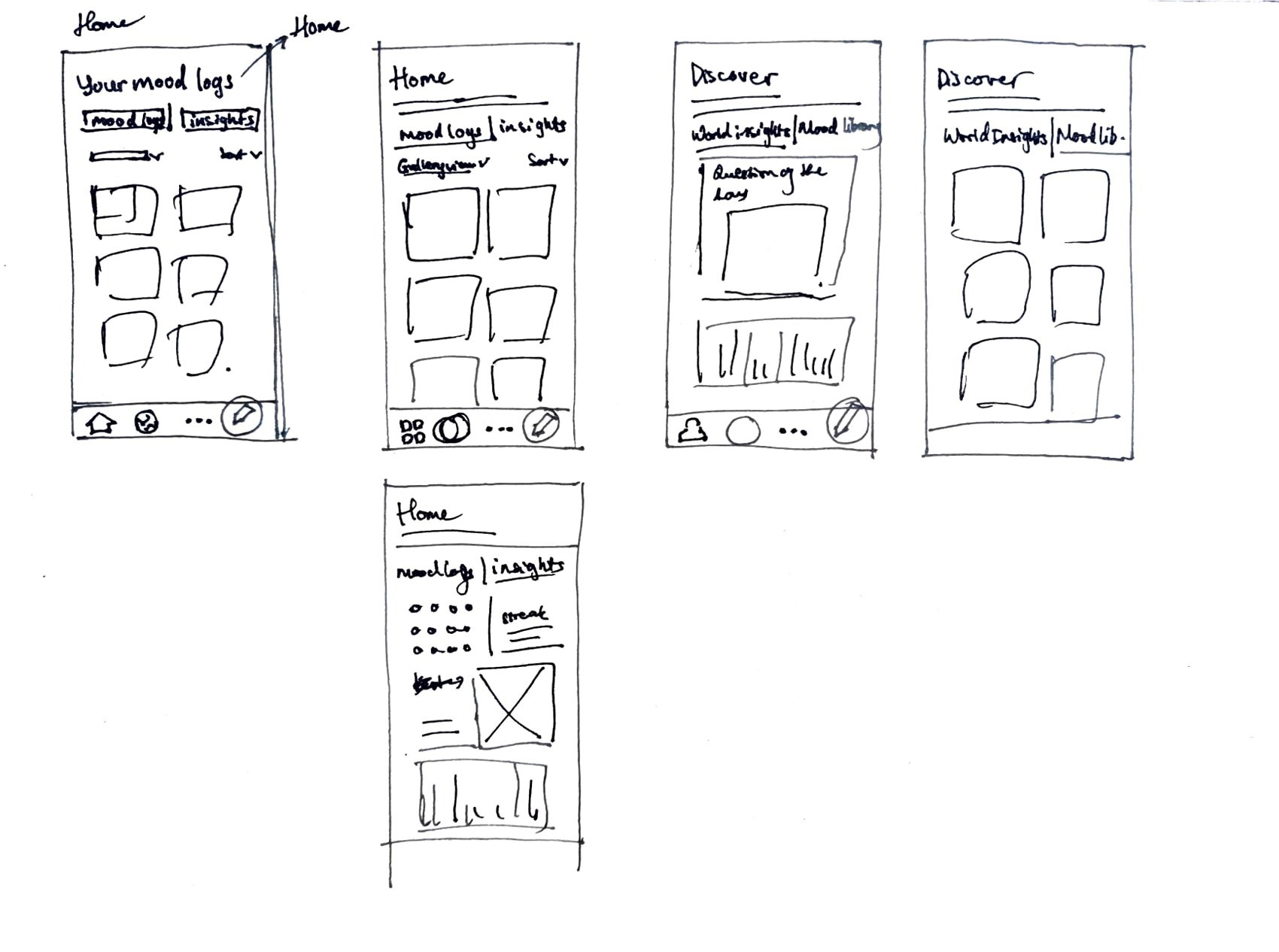

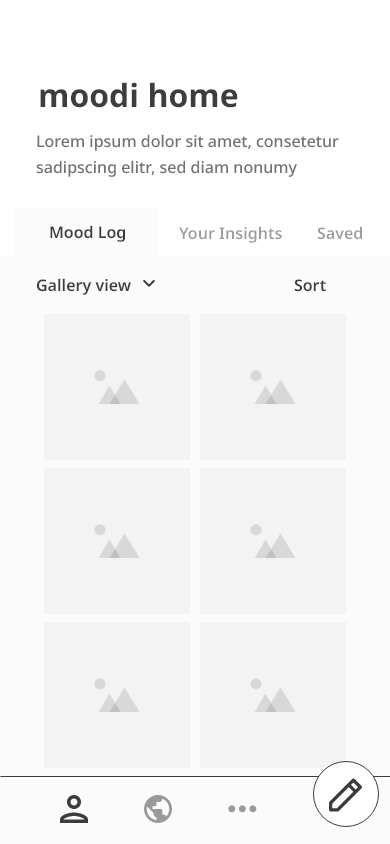

Main Features


Home

Mood Log: Public entries, tips, and facts can be saved. Users are in control of their own log feed.

Your Insights: Gain more insights through periodically generated artworks and reports.


Discover

World Insights: Shows what the world is feeling over a selected period of time.

Mood Bank: A “dictionary” of moods, with quick access to mood-relevant activities and data.
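At its core, World Insights is an aggregation of dominant moods over a time window. A minimal sketch, assuming each public entry is logged as a (timestamp, dominant mood) pair; the first version does not yet log this data, and `world_mood_distribution` is a hypothetical name.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, List, Tuple


def world_mood_distribution(
    entries: Iterable[Tuple[datetime, str]],
    start: datetime,
    end: datetime,
) -> List[Tuple[str, float]]:
    """Share of each dominant mood among public entries logged in [start, end).

    Returns (mood, share) pairs sorted from most to least common.
    """
    counts = Counter(mood for logged_at, mood in entries if start <= logged_at < end)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(mood, n / total) for mood, n in counts.most_common()]
```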

The moodi web application was a precursor project that translated text sentiment into p5.js-generated digital art, completed in four weeks.

This web app has a basic database structure, a Django backend, a wireframe UI, and limited AI components and output.

While the focus of this initial project was p5.js, this mobile app version of moodi is the outcome of considering users and use cases, pushing moodi closer to a marketable product.

More on the first iteration of moodi here.

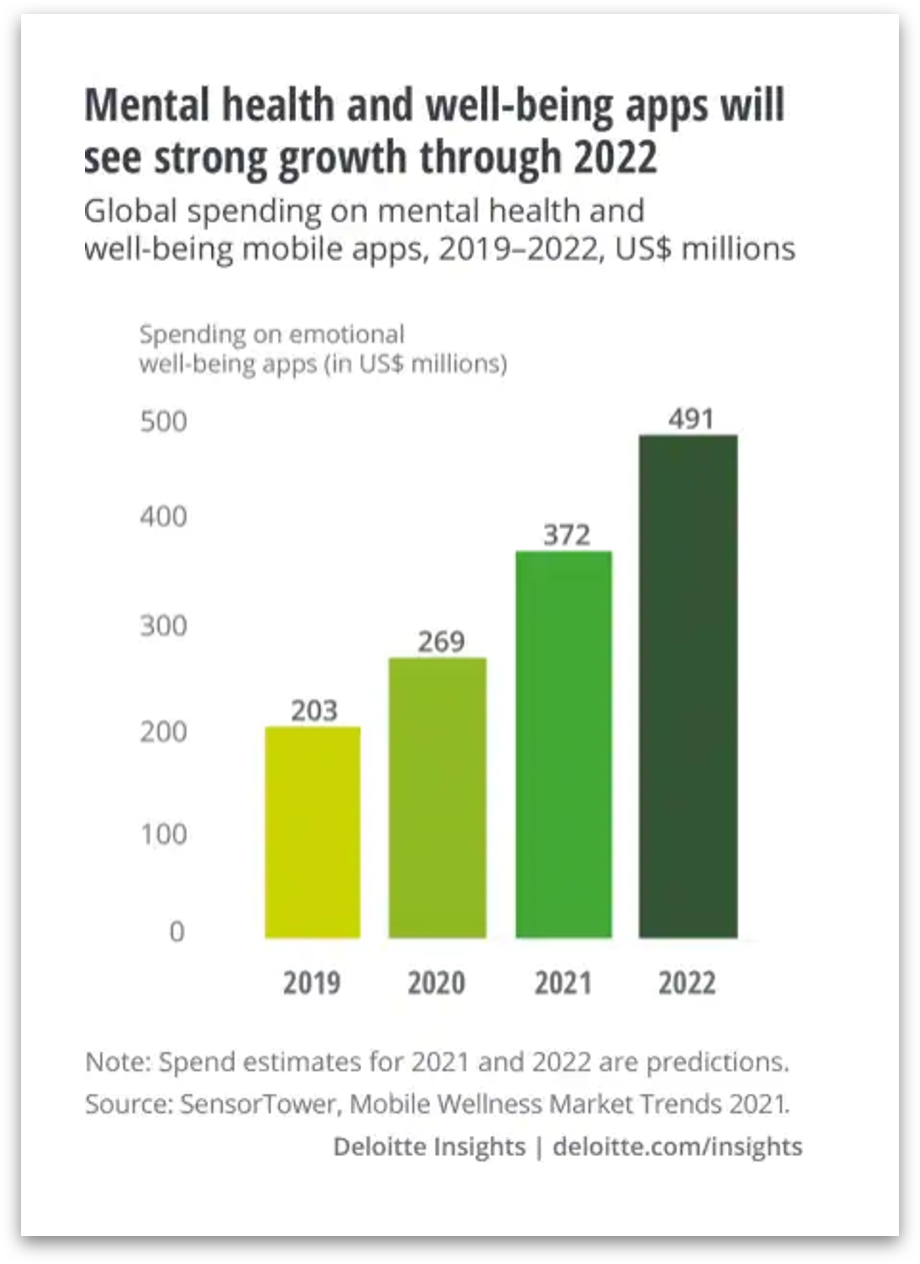

TLDR: The recent boom in the market for digital mental health and mindfulness services stems from an overall rise in interest in wellness, accelerated by the global pandemic.

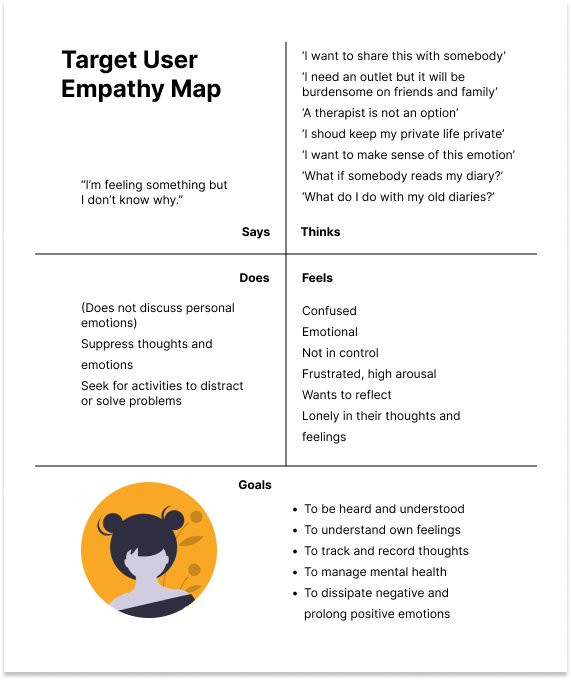

An empathy map was created based on these needs, centered around journal keeping as a personal device for maintaining mindfulness.

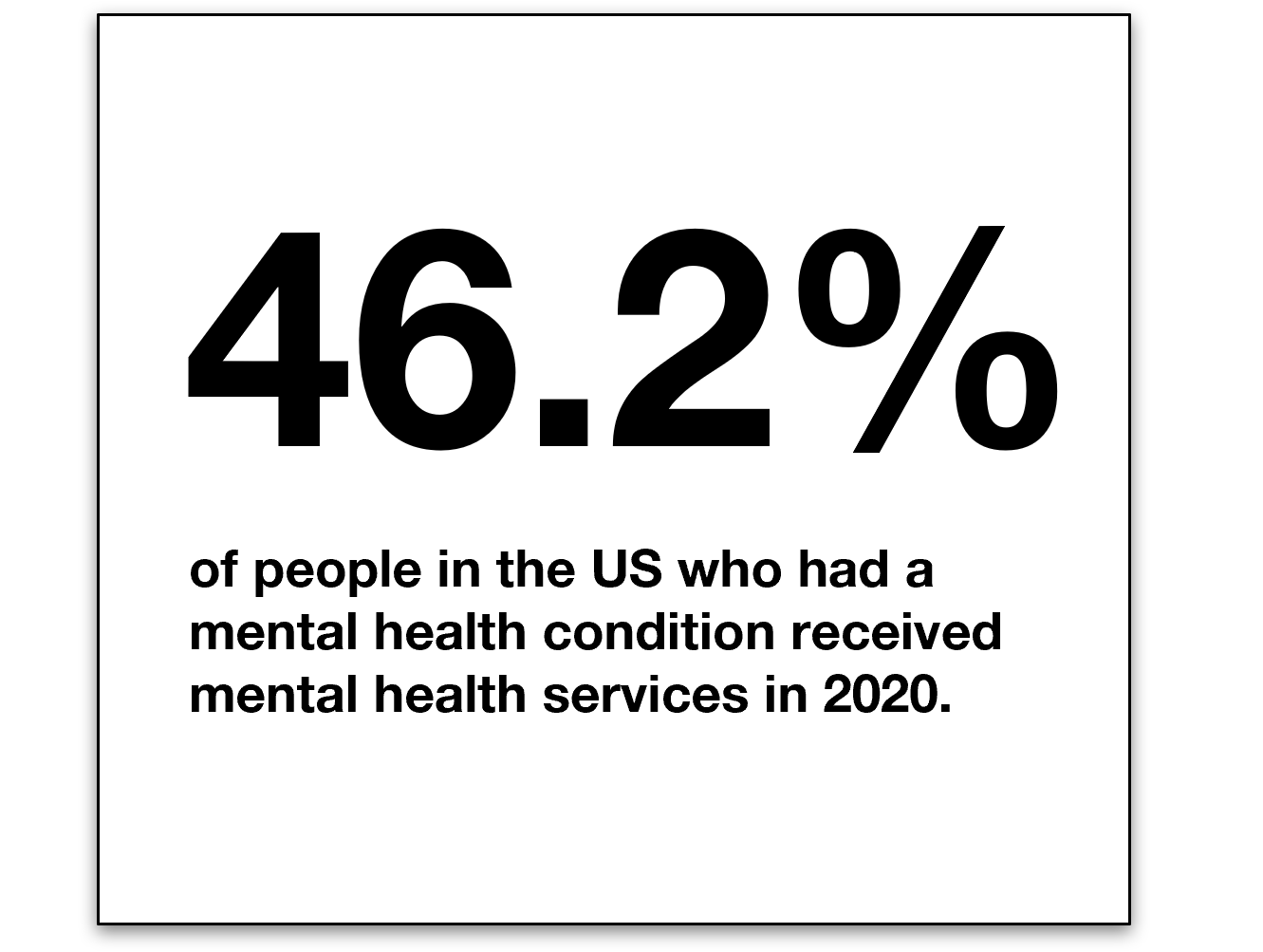

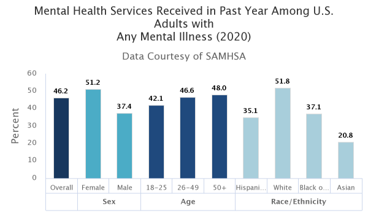

Fewer than half of the people in the US identified as having a mental health condition received professional care in 2020, and the data show a worrisome demographic disparity among them.

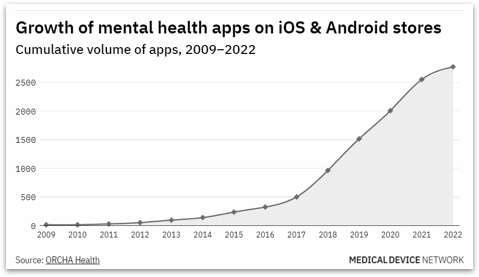

A rise in spending on, and popularity of, mental health apps shows that people are looking for alternative and more private coping strategies.

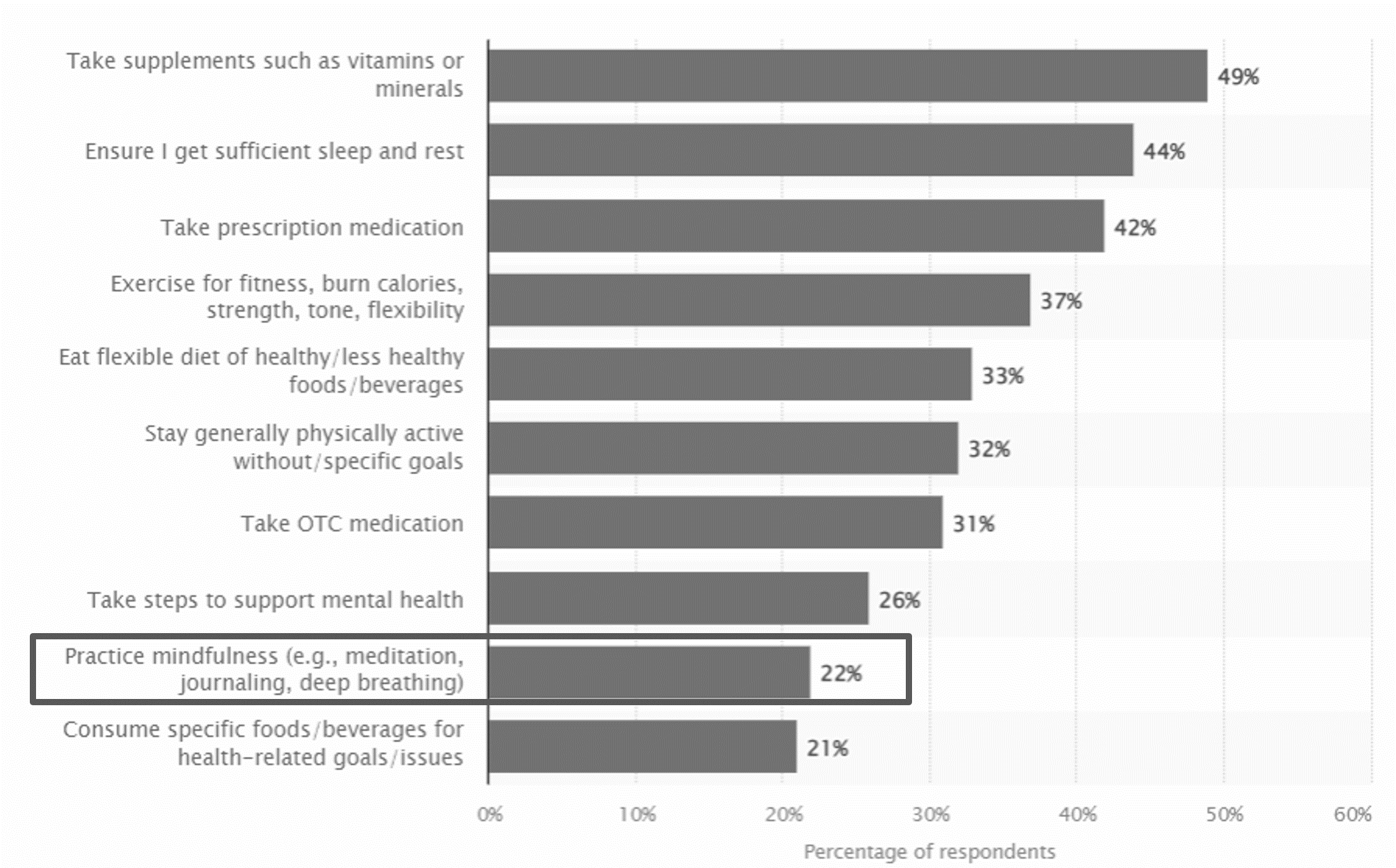

Mindfulness practices, including meditation, journaling, and deep breathing, were among the leading health strategies used by adults in the US in 2021. (Statista)

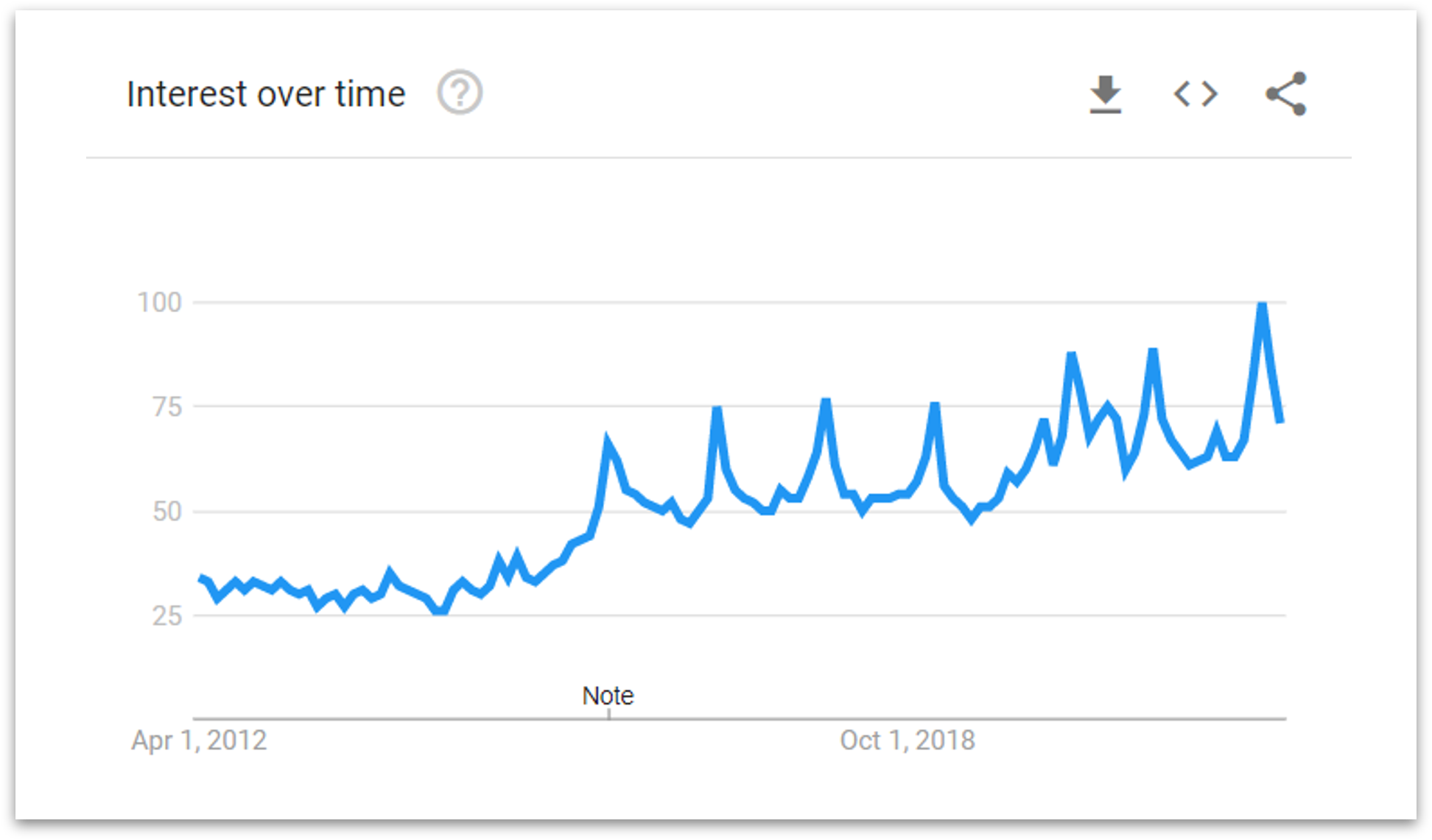

A growing, low-investment mindfulness practice is personal journaling. Chart: searches for "journaling" grew over the last 10 years. (Google Trends, keyword: "journaling")

→ Journaling As A Wellness Practice

The most obvious reasons for personal journaling are to reflect on and understand one’s own state of mind. Less well known is that journaling loses its effectiveness if carried out incorrectly, potentially leading to excessive rumination and obsession with certain problems.

The effectiveness of journaling for wellness depends largely on the approach to writing: troubleshooting or ruminating, honest or avoidant, expressive or unemotional.

A study also shows that “objective writing” does not help reduce symptoms of mental illness, while “expressive writing” does. (Niles, A. N., et al. (2013). Effect of Expressive Writing on Psychological and Physical Health: The Moderating Role of Emotional Expressivity. Anxiety, Stress, & Coping.)

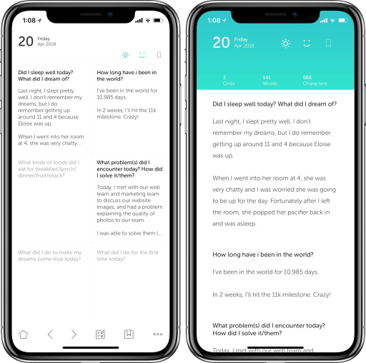

Current diary and journaling apps do not offer much more than regular text editors.

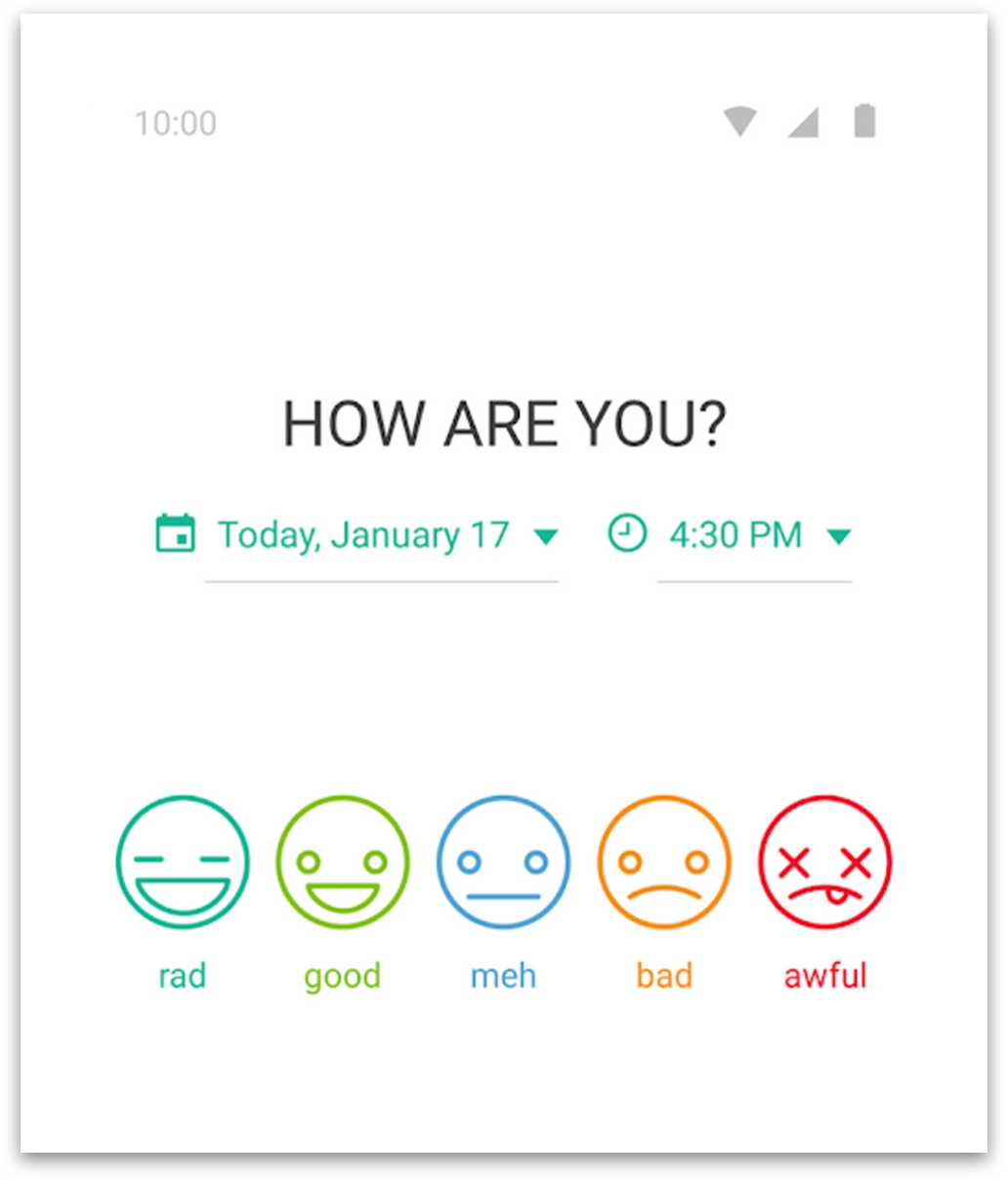

Their cousins, mood tracking apps, oversimplify the process through self-reporting, asking users to choose from a limited scale of emojis. The “mood” is often selected before writing, which goes against the natural process of understanding emotions through writing and only reaching a conclusion afterwards.

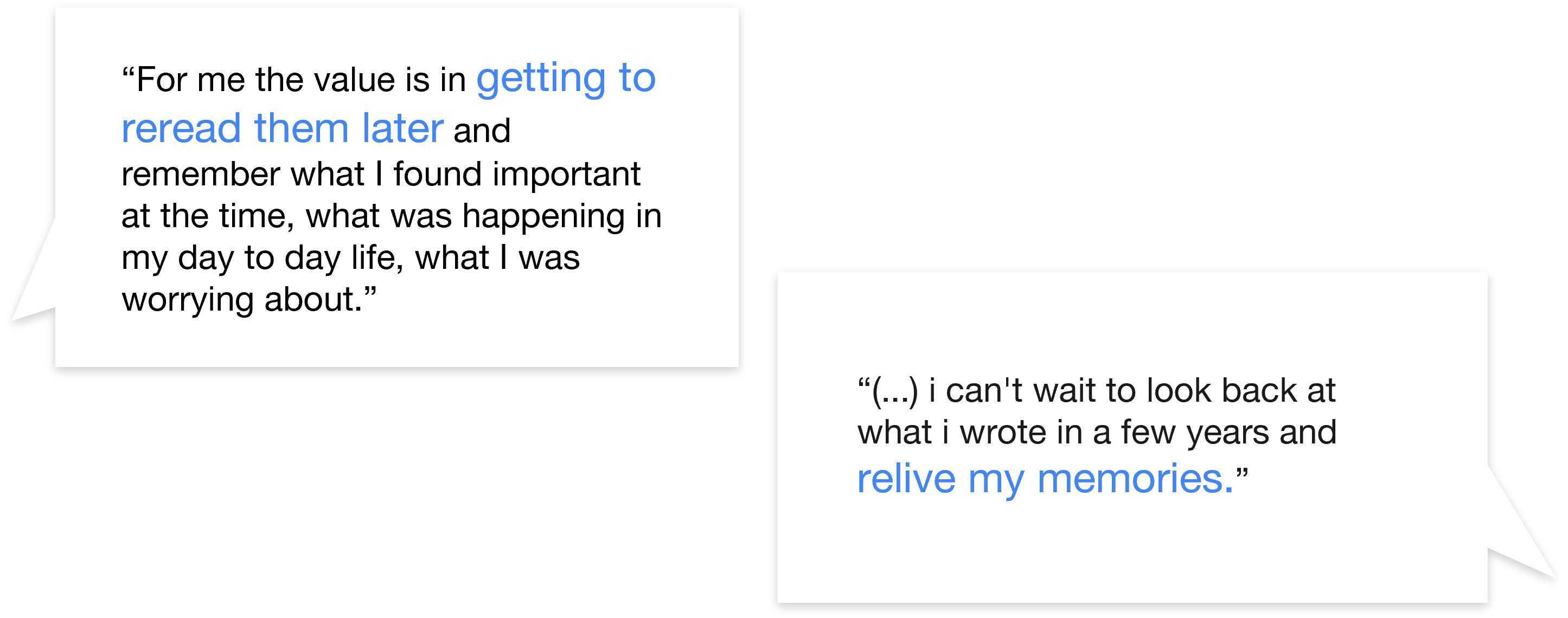

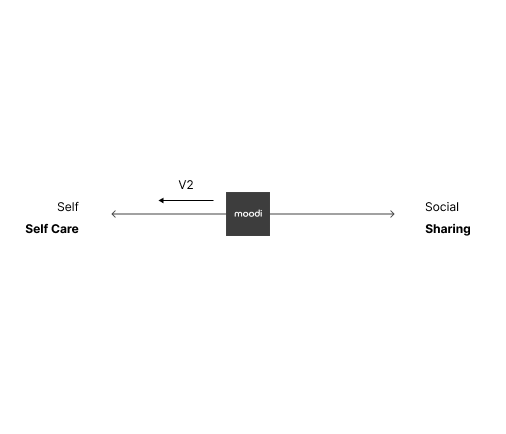

→ Journaling To Keep Memories

People also write to log memories and “immortalize” mortal happenings.

However, the writing is of value only to the writer, unless they are comfortable sharing or publicizing it. Even to the writer, journals are not valuable until time has passed, and during that aging period, physical diaries sit around taking up space.

Diarists and journal keepers often end up wondering how to deal with old journals.

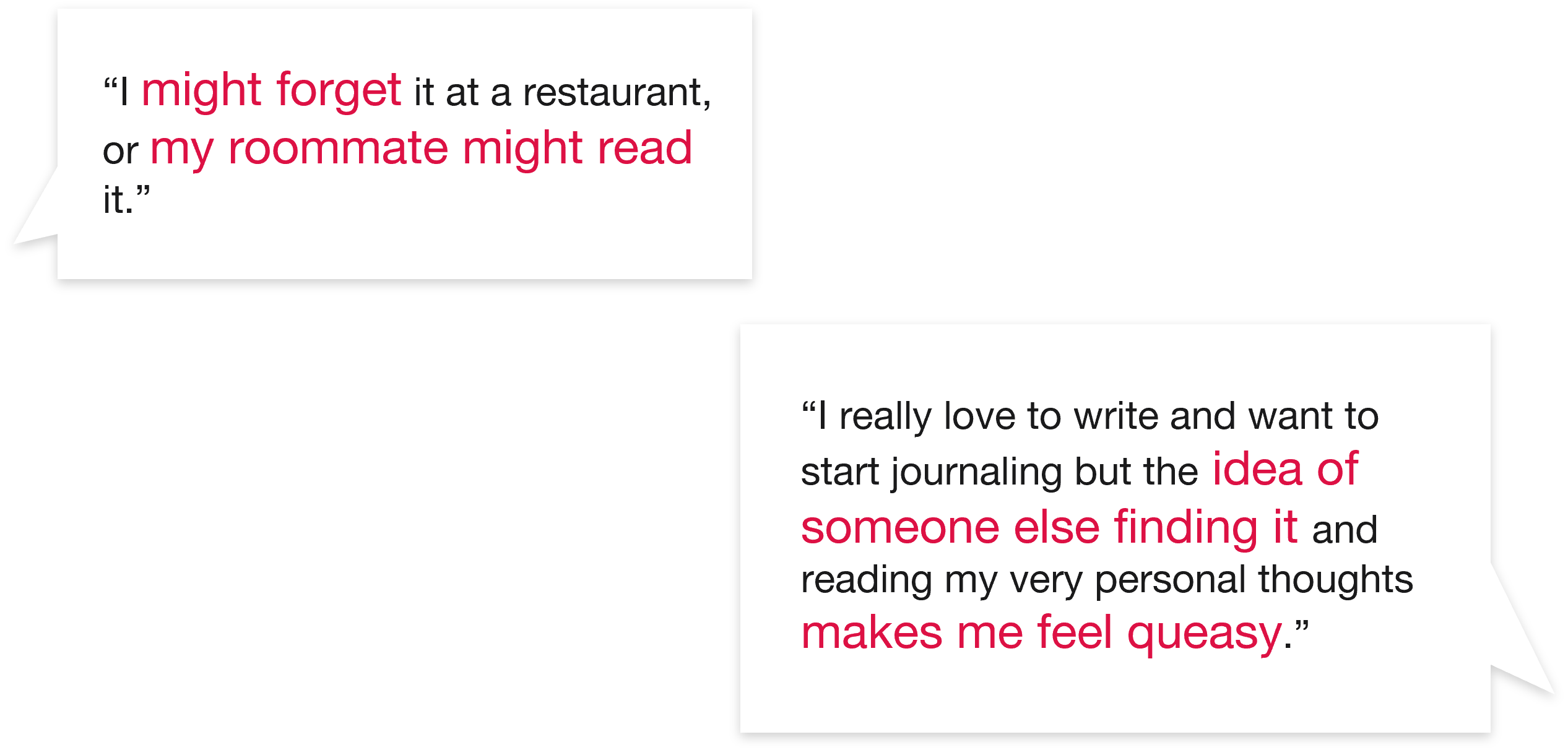

In journaling's most critical use case, when the content must be kept extra private, writers often feel insecure about, and ashamed of, keeping “proof” of their thoughts.

Expectations

Reality

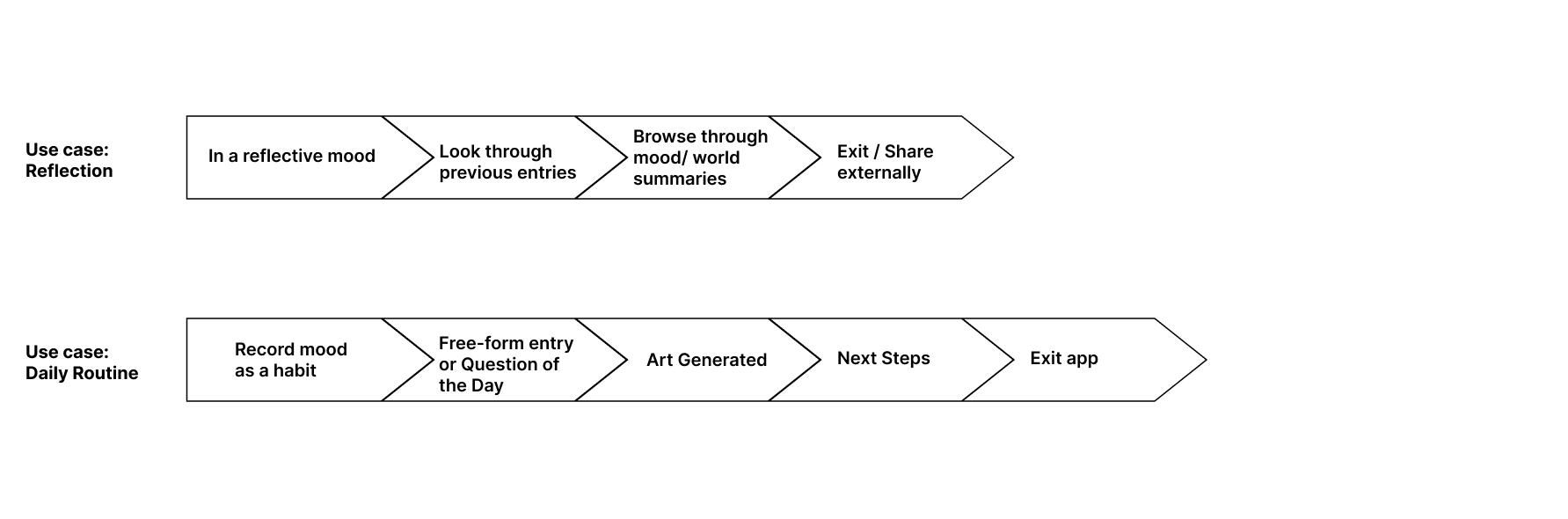

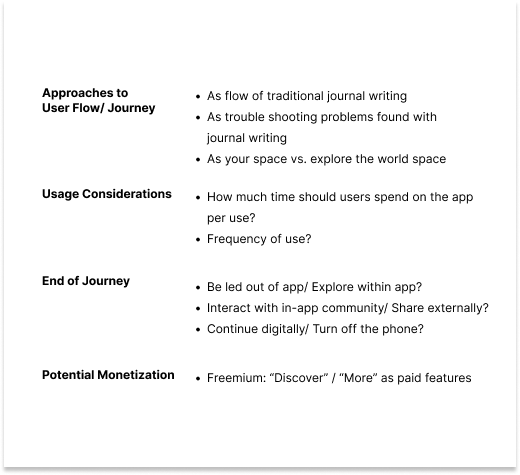

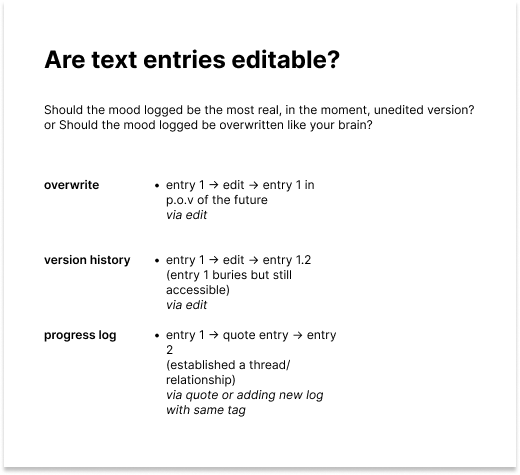

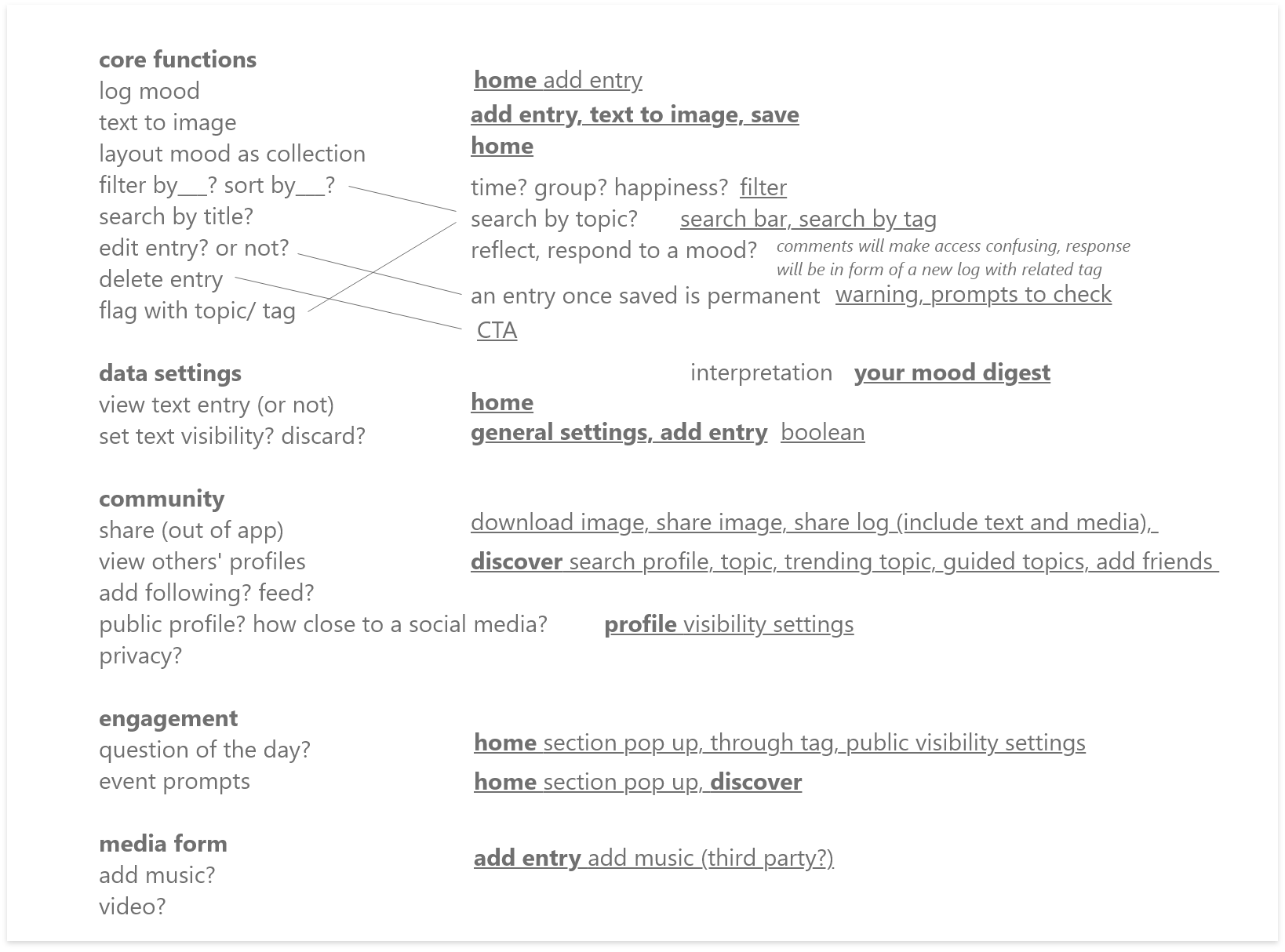

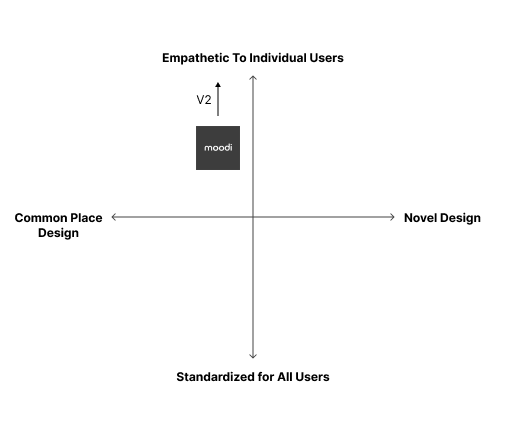

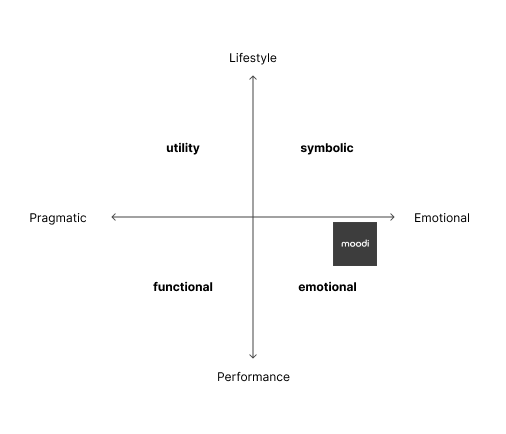

TLDR: Use cases and value propositions were iterated on and expanded to shape the moodi experience. An additional constraint at this step was my limited programming ability combined with the project's time frame.

Given the broad application potential of mood-based text-to-image conversion, narrowing down the features and bringing them together into one coherent product remains a big challenge.

Through a lot of brainstorming, weighing priorities using quadrants, and trying to answer my own questions, moodi evolved from a web app with only core functions into a mood diary mobile application with its own set of features.

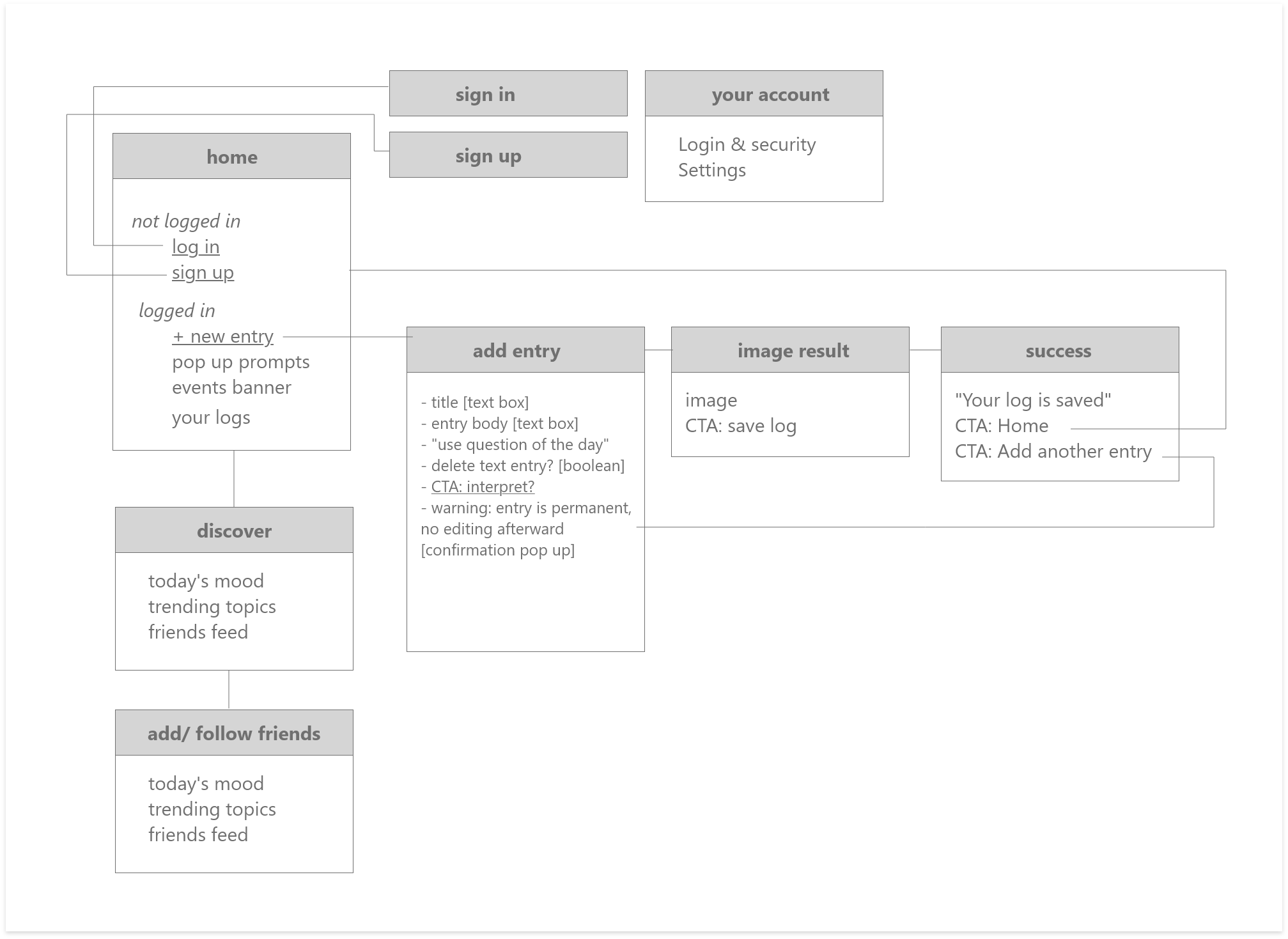

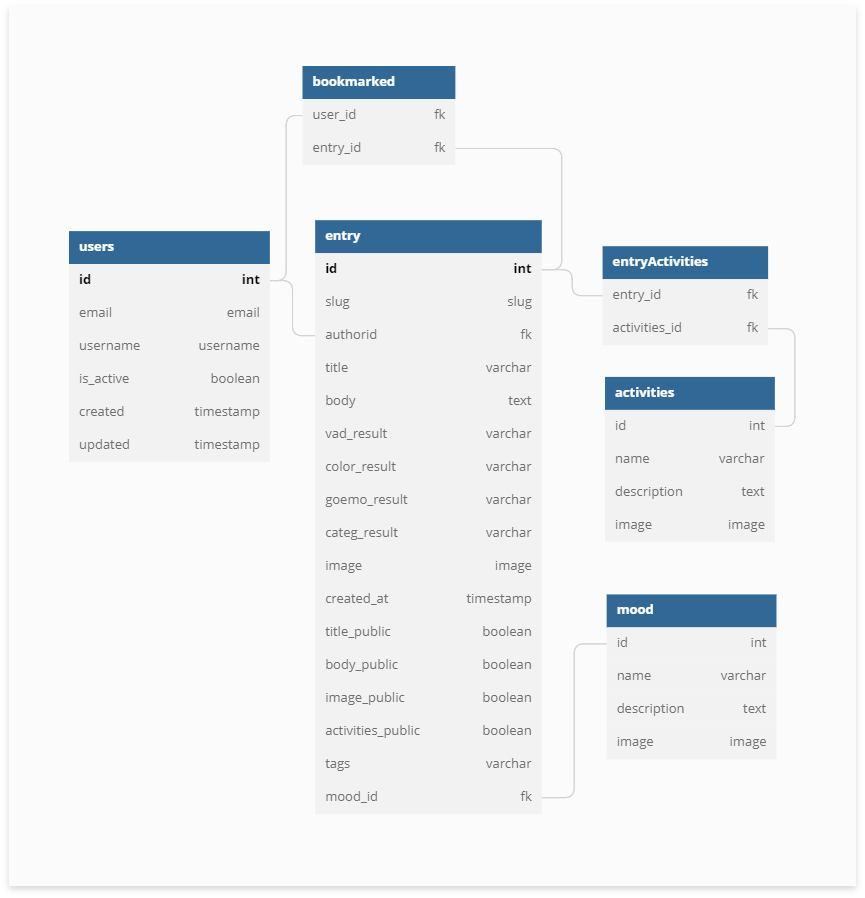

TLDR: After deciding which sets of features to carry forward, the next step was translating them into front-end and back-end frameworks.

This version of the Android app is comparable to a functional wireframe. I focused much more on the holistic idea of the app and kept the UI stripped down, largely because of time constraints and my inexperience with Flutter and Material App as a front end.

XD wireframe

Android app

The database created for moodi is admittedly not the cleanest, but it did the job for the first version. This version does not yet include data logging for the “World” and “Summary” sections.

The app is built on the basic backend of the moodi web app. Since p5.js was the main requirement of the previous project, this version's art generation is done through a Flutter WebView, making it a hybrid app.

To improve the app’s functionality, reliance on APIs should be minimized by making most functions local for convenience of use. Replacing p5.js and HTML5 with Flutter's own canvas could reduce delays and failures caused by too many simultaneous API calls.

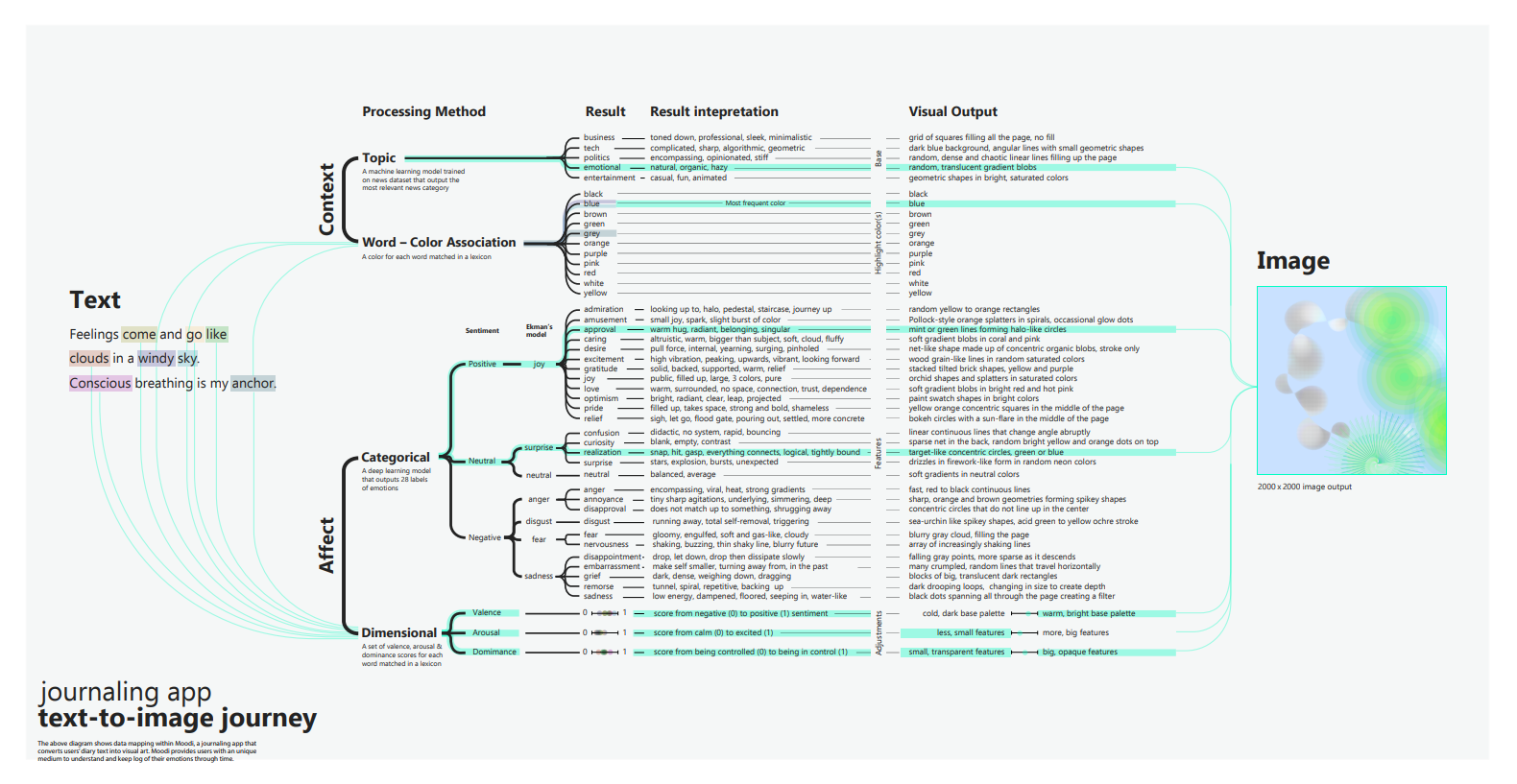

TLDR: A deep dive into analyzing a diary entry for emotions, then assigning visual attributes to the results. In this version, moodi does not generate art using machine learning, which avoids the complexities that come with assigning emotions to existing artworks and visual assets.

Regular text-to-image models mainly focus on creating a literal interpretation of the text, generating images by focusing on visual descriptions or nouns.

Existing sentiment analysis models only generate keyword results, e.g., anger, sadness, joy…

The emotional perception of a visual piece is still too subjective and human for machine categorization, hence the lack of data for training machines on sentiment-based text-to-image generation.

My job is to build a bridge between what is available in machine learning and text sentiment analysis on one side, and a purely human act, art making, on the other.

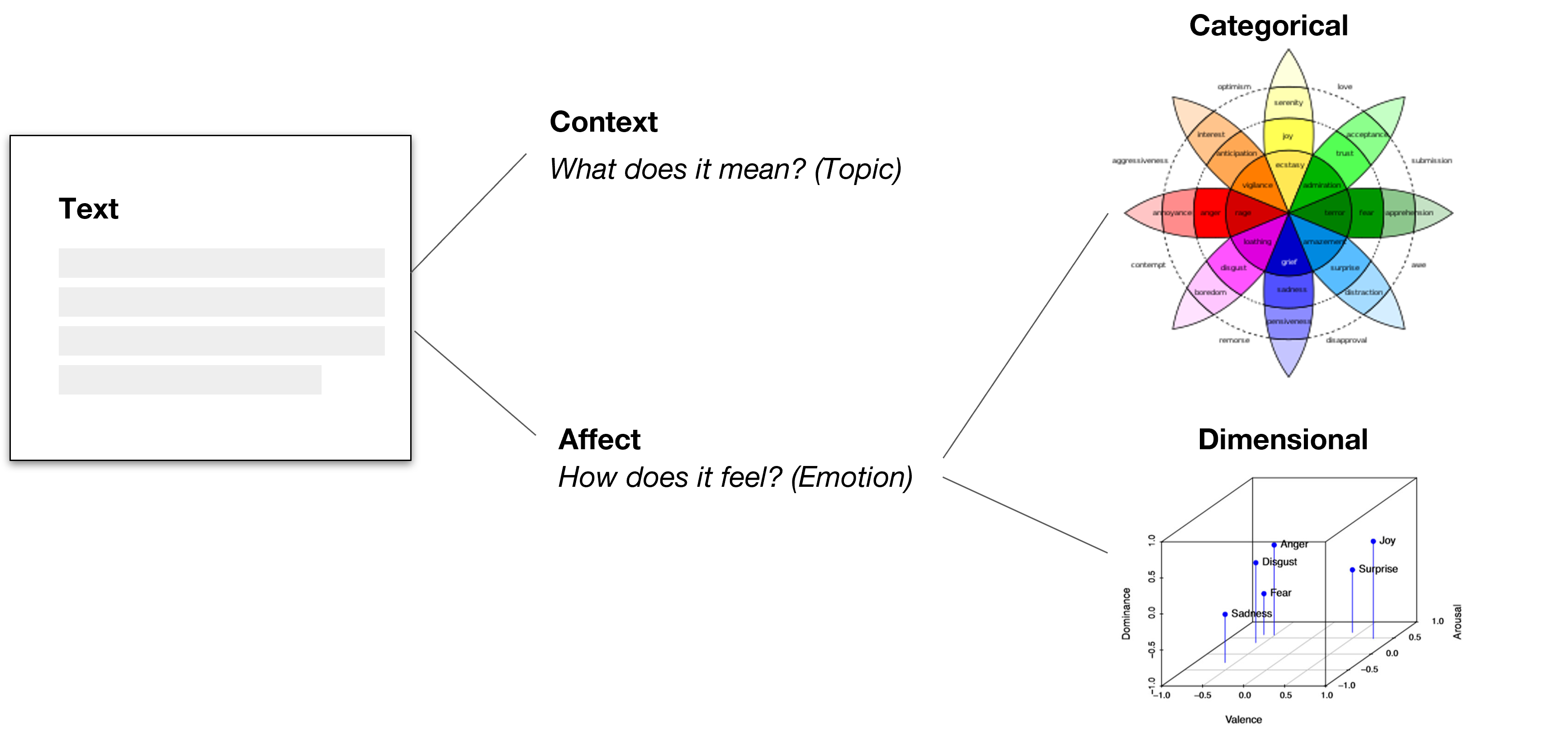

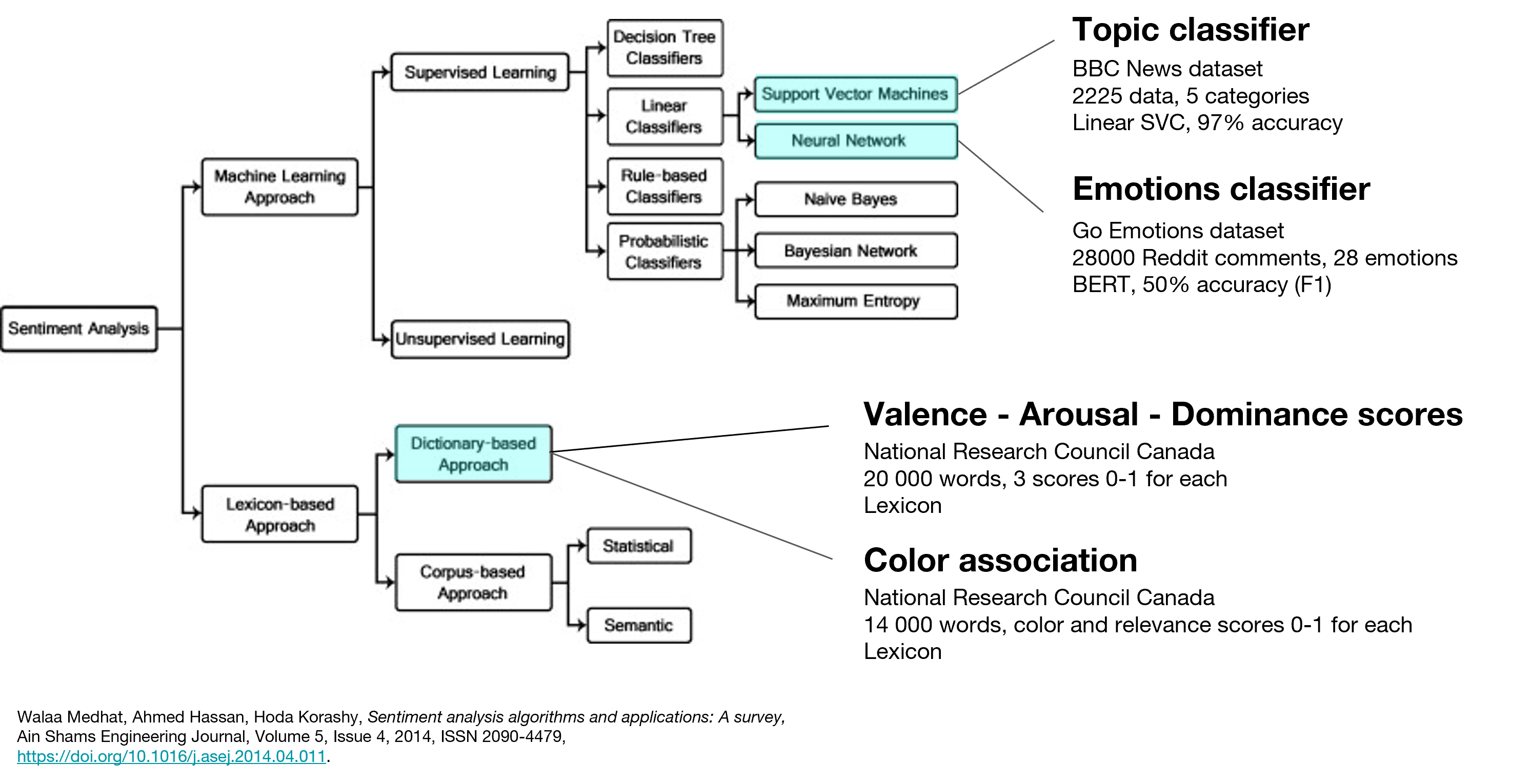

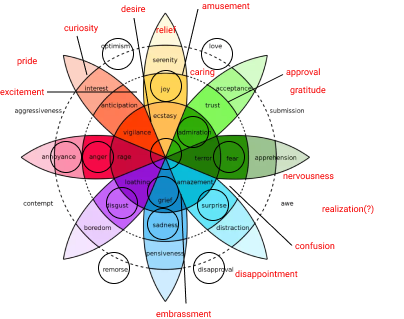

To approach the vast and already vague subject of sentiment analysis, I looked to scientific studies of emotion. Two main models of human emotion are still in use today: categorical (every emotion is a different combination of six basic emotions) and dimensional (emotions can be described by different levels of valence, arousal, and dominance). For the best attainable accuracy, I decided to include both approaches in the moodi algorithm.
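To keep both models side by side, each reading can carry a categorical score table and a valence-arousal-dominance (VAD) triple. The dimensional part below averages per-word VAD values from a lexicon. This is a minimal sketch: `EmotionReading`, `vad_from_lexicon`, and the three-word lexicon with its numbers are made up for illustration, and a real version would load a published VAD lexicon instead.

```python
import re
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

VAD = Tuple[float, float, float]  # (valence, arousal, dominance), each in [0, 1]

# Toy lexicon with made-up values, for illustration only.
TOY_VAD_LEXICON: Dict[str, VAD] = {
    "bombed": (0.05, 0.90, 0.40),
    "ruins": (0.10, 0.55, 0.30),
    "shiver": (0.20, 0.60, 0.25),
}


@dataclass
class EmotionReading:
    categories: Dict[str, float]  # categorical view, e.g. {"fear": 0.7}
    dimensions: Optional[VAD]     # dimensional view; None when no lexicon word matched


def vad_from_lexicon(text: str, lexicon: Dict[str, VAD]) -> Optional[VAD]:
    """Average the VAD triples of all lexicon words found in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = [lexicon[w] for w in words if w in lexicon]
    if not hits:
        return None
    valence, arousal, dominance = (sum(values) / len(hits) for values in zip(*hits))
    return (valence, arousal, dominance)
```

Keeping the two views separate, rather than collapsing one into the other, leaves room to decide later how much each should influence the artwork.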

Four ways of analyzing text were chosen, two machine learning models and two lexicons, set to run simultaneously. The moodi algorithm analyzes text by both context and affect, at different levels of text (aspect, sentence, and document level).
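The "run simultaneously, at several levels" idea can be sketched with a thread pool: every analyzer scores each sentence and the whole document, and the scores are merged by a weighted average. This is a minimal sketch, not moodi's code: the analyzers are toy stand-ins for the two models and two lexicons, and the regex sentence splitter, the weights, and the names `run_analyzers`, `combine`, and `analyze_entry` are assumptions. Aspect-level analysis is left out.

```python
import re
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Scores = Dict[str, float]
Analyzer = Callable[[str], Scores]


def split_sentences(text: str) -> List[str]:
    """Naive splitter on ., ! and ?; a real pipeline would use an NLP library."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def run_analyzers(text: str, analyzers: Dict[str, Analyzer]) -> Dict[str, Scores]:
    """Run every analyzer on the same text concurrently and collect the results."""
    with ThreadPoolExecutor(max_workers=max(1, len(analyzers))) as pool:
        futures = {name: pool.submit(fn, text) for name, fn in analyzers.items()}
        return {name: future.result() for name, future in futures.items()}


def combine(results: Dict[str, Scores], weights: Dict[str, float]) -> Scores:
    """Weighted average of label scores; a label an analyzer omits counts as 0."""
    total_weight = sum(weights[name] for name in results)
    combined: Scores = {}
    for name, scores in results.items():
        for label, value in scores.items():
            combined[label] = combined.get(label, 0.0) + weights[name] * value
    return {label: value / total_weight for label, value in combined.items()}


def analyze_entry(
    text: str, analyzers: Dict[str, Analyzer], weights: Dict[str, float]
) -> Dict[str, object]:
    """Score each sentence and the whole document with all analyzers."""
    sentence_level = [
        combine(run_analyzers(sentence, analyzers), weights)
        for sentence in split_sentences(text)
    ]
    document_level = combine(run_analyzers(text, analyzers), weights)
    return {"sentences": sentence_level, "document": document_level}
```

The per-analyzer weights are where "total control of how each text-analysis model affects the outcome" would live in practice.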

Diagram: analyzing a deep learning model trained on the GoEmotions dataset, and mapping each of its results onto Ekman’s emotion categorization model.
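Mapping GoEmotions results onto Ekman's model is essentially a lookup from 27 fine-grained labels to six basic emotions. The sketch below uses the Ekman grouping distributed with the GoEmotions dataset and sums probabilities within each group. The grouping in moodi's diagram and the choice to sum (rather than, say, take the maximum) may differ, so treat both as assumptions. `dominant` picks the strongest category, the kind of value shown to the user as the entry's dominant mood.

```python
from typing import Dict, List

# Grouping of the 27 GoEmotions labels into Ekman's six basic emotions,
# following the Ekman mapping distributed with the GoEmotions dataset.
EKMAN_MAPPING: Dict[str, List[str]] = {
    "anger": ["anger", "annoyance", "disapproval"],
    "disgust": ["disgust"],
    "fear": ["fear", "nervousness"],
    "joy": ["joy", "amusement", "approval", "excitement", "gratitude", "love",
            "optimism", "relief", "pride", "admiration", "desire", "caring"],
    "sadness": ["sadness", "disappointment", "embarrassment", "grief", "remorse"],
    "surprise": ["surprise", "realization", "confusion", "curiosity"],
}

# Reverse index: fine-grained label -> Ekman category.
LABEL_TO_EKMAN = {label: ekman for ekman, labels in EKMAN_MAPPING.items() for label in labels}


def to_ekman(goemotions_scores: Dict[str, float]) -> Dict[str, float]:
    """Sum fine-grained GoEmotions scores into Ekman categories.

    Labels outside the mapping (such as "neutral") are ignored.
    """
    totals = {category: 0.0 for category in EKMAN_MAPPING}
    for label, score in goemotions_scores.items():
        category = LABEL_TO_EKMAN.get(label)
        if category is not None:
            totals[category] += score
    return totals


def dominant(scores: Dict[str, float]) -> str:
    """Return the category with the highest score."""
    return max(scores, key=scores.get)
```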

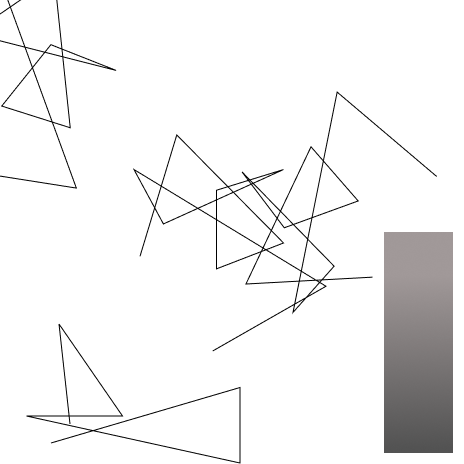

By not using a machine learning model that directly generates images, I have the artistic freedom to determine what certain emotions look like. Another advantage is that I have total control over how each text-analysis model affects the final outcome. On top of that, the generated image does not suffer from incompleteness, absurdities, and other typical output failures.

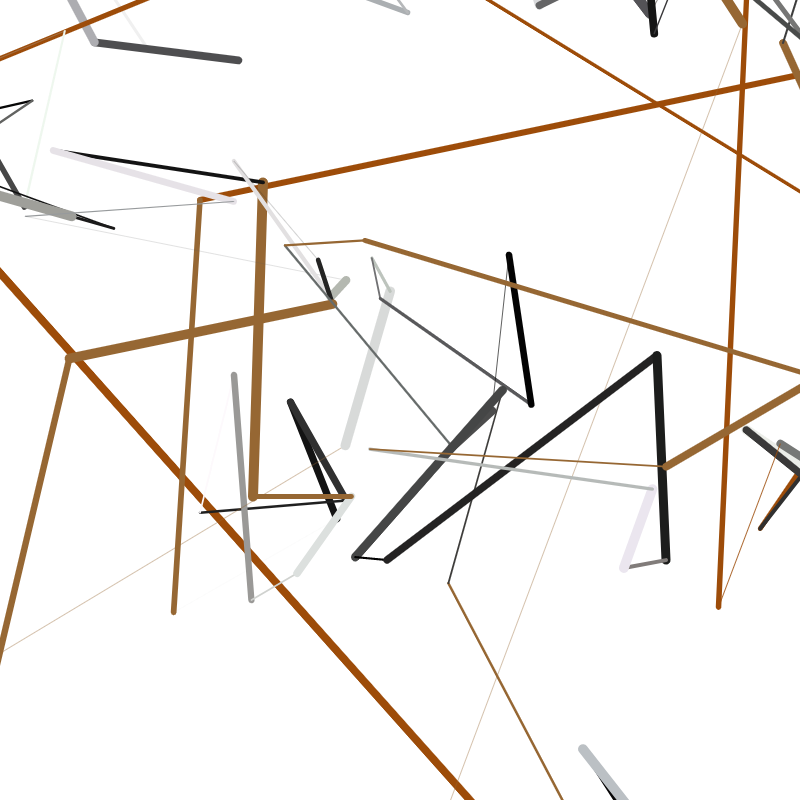

Processing result → confusion

My interpretation → didactic, rapid, bouncing, random, entangled

Output sketch → generated feature
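Because the visual side is hand-authored rather than learned, the mapping can be a plain lookup table from a processing result to a style. A minimal sketch, assuming the verbal interpretation is turned into a few numeric drawing parameters: `VisualStyle`, `style_for`, the parameter names and values, the neutral fallback, and the intensity scaling are all hypothetical, and only the trait words for "confusion" come from the example above.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class VisualStyle:
    traits: Tuple[str, ...]  # the designer's verbal interpretation
    speed: float             # hypothetical: how fast marks move (0 to 1)
    randomness: float        # hypothetical: jitter applied to paths (0 to 1)
    entanglement: float      # hypothetical: how often paths cross (0 to 1)


# Hand-authored table: the designer, not a model, decides what each result looks like.
STYLES: Dict[str, VisualStyle] = {
    "confusion": VisualStyle(
        traits=("didactic", "rapid", "bouncing", "random", "entangled"),
        speed=0.9, randomness=0.8, entanglement=0.9,
    ),
}

# Hypothetical fallback for results without a hand-made style yet.
DEFAULT_STYLE = VisualStyle(traits=("neutral",), speed=0.2, randomness=0.1, entanglement=0.1)


def style_for(result: str, intensity: float) -> VisualStyle:
    """Look up the hand-made style and scale its parameters by the result's intensity."""
    base = STYLES.get(result, DEFAULT_STYLE)
    k = max(0.0, min(1.0, intensity))  # clamp intensity to [0, 1]
    return VisualStyle(base.traits, base.speed * k, base.randomness * k, base.entanglement * k)
```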

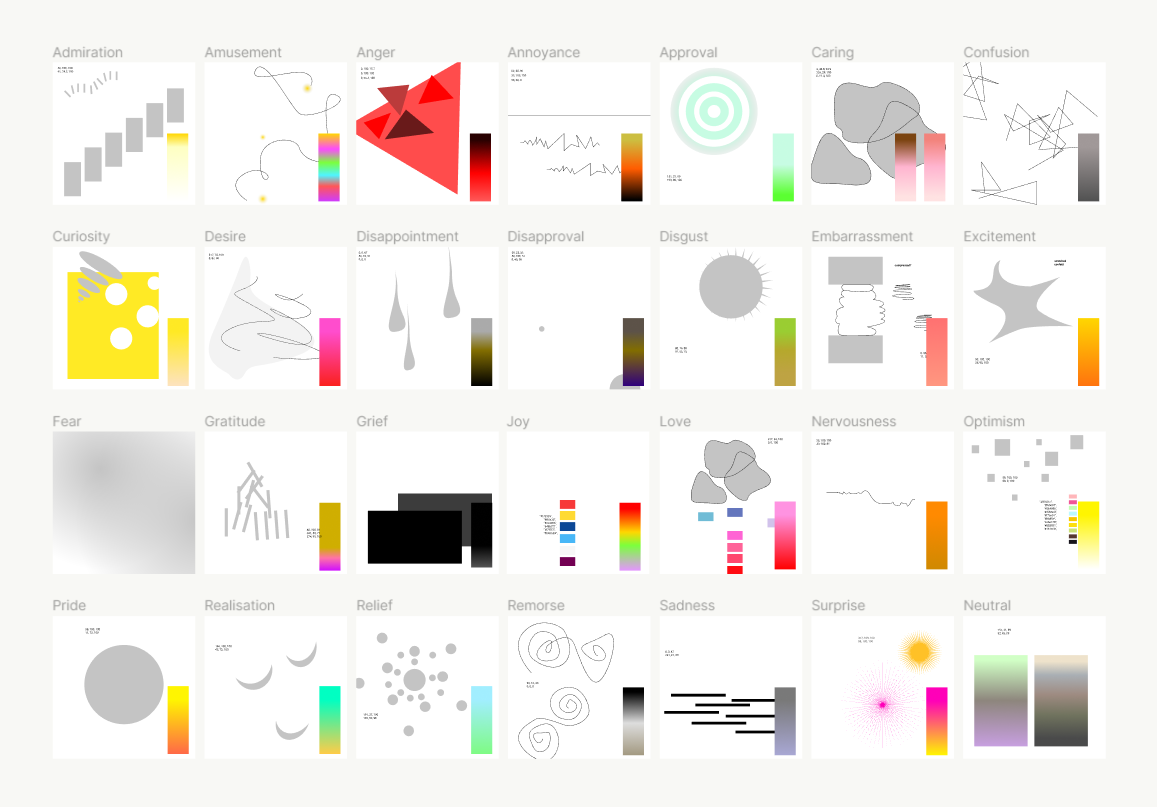

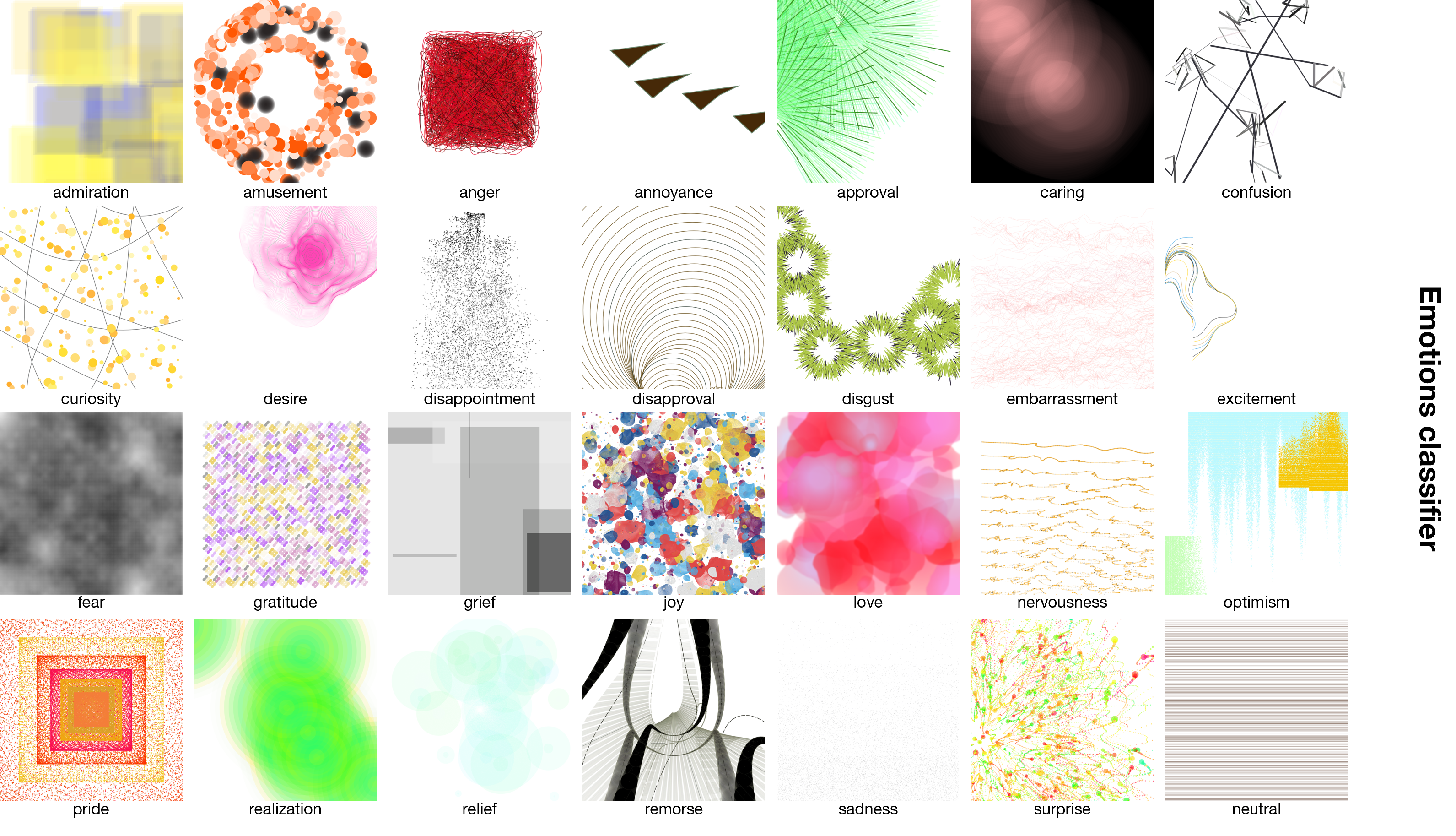

A different piece of generative art is assigned to each text-analysis result.

Because of the time constraint of having to produce at least 33 pieces of generative art, many of the outputs reference other digital artists’ code, with adjustments.
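Assigning one piece of generative art per result maps naturally onto a registry: each art function registers itself under the result it represents, and an entry's results are composed into stacked layers. This minimal sketch assumes results arrive as label-to-score pairs, with topic results prefixed `topic:`; the `sketch` decorator, the layer format, and both example functions are hypothetical stand-ins for the actual p5.js sketches.

```python
from typing import Callable, Dict, List

Layer = Dict[str, object]          # hypothetical description of one drawn layer
Sketch = Callable[[float], Layer]  # takes a result score, returns a layer

SKETCHES: Dict[str, Sketch] = {}


def sketch(result: str) -> Callable[[Sketch], Sketch]:
    """Register a generative-art function for one analysis result."""
    def register(fn: Sketch) -> Sketch:
        SKETCHES[result] = fn
        return fn
    return register


@sketch("confusion")
def tangled_lines(score: float) -> Layer:
    # More confusion -> more tangled lines.
    return {"kind": "tangled_lines", "count": int(10 + 90 * score)}


@sketch("topic:nature")
def leaf_shapes(score: float) -> Layer:
    return {"kind": "leaves", "count": int(5 + 20 * score)}


def compose(results: Dict[str, float]) -> List[Layer]:
    """One layer per registered result, strongest first; unregistered results are skipped."""
    ordered = sorted(results.items(), key=lambda kv: kv[1], reverse=True)
    return [SKETCHES[name](score) for name, score in ordered if name in SKETCHES]
```

A registry keeps each of the many art pieces self-contained, so adding the next one only means writing and registering a new function.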

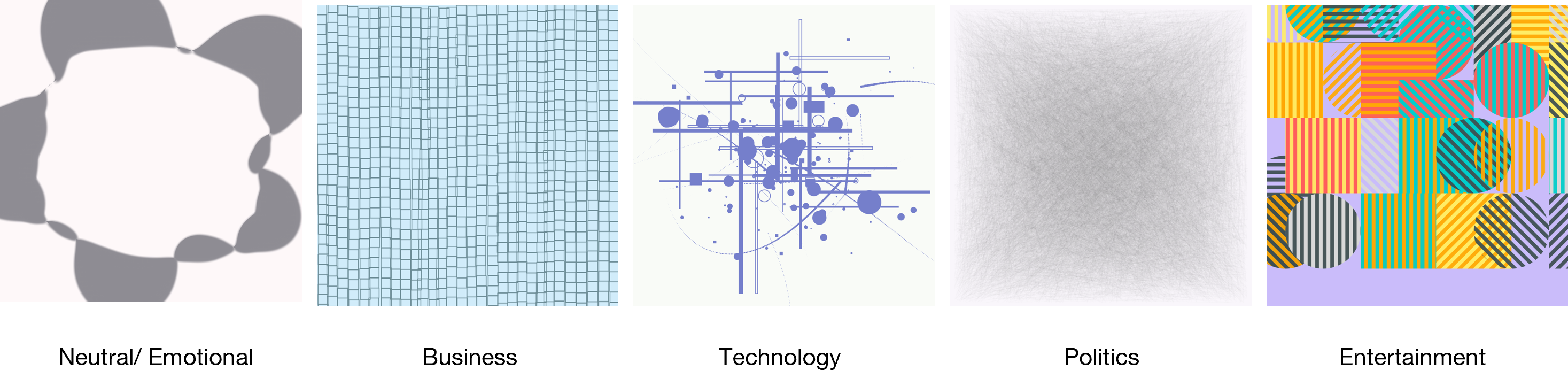

Generated Features (based on identified topic)

Generated Features (based on GoEmotions results)

Full mapping of the algorithm

Moodi is a pretty big independent project that allowed me to explore outside the scope of traditional design.

This project made me realize that this murky intersection of fields will be the future for designers and artists. I learned so much about the study of emotions, natural language processing, sentiment analysis, data handling, managing an intimidating project, coding in a totally new language (Dart), asynchronous programming… and the act of journal keeping.

Moodi has taught me to be ambitious, but at the same time reminded me that more is not always better.

The most valuable feedback I received for this project summarized my current and future moodi journey: “Now that you’ve explored all possibilities, it’s time to take a big step back and narrow down features, and really work on what it feels like to use this app.”

I am excited not only to say “I taught myself to make an app!”, but most of all about the potential of this text-to-image algorithm, which proved surprisingly accurate despite the slow approach I took.